Sqoop: import all MySQL tables into Hive

1. Import into the default Hive database.

[root@node1 sqoop-1.4.7]# bin/sqoop-import-all-tables --connect jdbc:mysql://node1:3306/esdb --username root --password 123456 --hive-import --create-hive-table

Warning: /opt/sqoop-1.4.7/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

18/05/24 15:26:19 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

18/05/24 15:26:19 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/05/24 15:26:19 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override

18/05/24 15:26:19 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.

18/05/24 15:26:20 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/05/24 15:26:20 INFO tool.CodeGenTool: Beginning code generation

18/05/24 15:26:20 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `files` AS t LIMIT 1

18/05/24 15:26:20 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `files` AS t LIMIT 1

18/05/24 15:26:20 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/hadoop-2.7.5

Note: /tmp/sqoop-root/compile/a38c87c70af413cbb1ec75d0b0f5ad34/files.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/05/24 15:26:22 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/a38c87c70af413cbb1ec75d0b0f5ad34/files.jar

18/05/24 15:26:22 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/05/24 15:26:22 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/05/24 15:26:22 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/05/24 15:26:22 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/05/24 15:26:22 INFO mapreduce.ImportJobBase: Beginning import of files

18/05/24 15:26:22 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/05/24 15:26:23 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/05/24 15:26:23 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

18/05/24 15:26:26 INFO db.DBInputFormat: Using read commited transaction isolation

18/05/24 15:26:26 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `files`

18/05/24 15:26:26 INFO db.IntegerSplitter: Split size: 810; Num splits: 4 from: 1 to: 3244

18/05/24 15:26:26 INFO mapreduce.JobSubmitter: number of splits:4

18/05/24 15:26:26 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1526097883376_0015

18/05/24 15:26:26 INFO impl.YarnClientImpl: Submitted application application_1526097883376_0015

18/05/24 15:26:26 INFO mapreduce.Job: The url to track the job: http://bigdata03-test:8088/proxy/application_1526097883376_0015/

18/05/24 15:26:26 INFO mapreduce.Job: Running job: job_1526097883376_0015

18/05/24 15:26:33 INFO mapreduce.Job: Job job_1526097883376_0015 running in uber mode : false

18/05/24 15:26:33 INFO mapreduce.Job: map 0% reduce 0%

18/05/24 15:26:39 INFO mapreduce.Job: map 25% reduce 0%

18/05/24 15:26:40 INFO mapreduce.Job: map 100% reduce 0%

18/05/24 15:26:40 INFO mapreduce.Job: Job job_1526097883376_0015 completed successfully

18/05/24 15:26:41 INFO mapreduce.Job: Counters: 31

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=570260

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=412

HDFS: Number of bytes written=3799556

HDFS: Number of read operations=16

HDFS: Number of large read operations=0

HDFS: Number of write operations=8

Job Counters

Killed map tasks=1

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=16521

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=16521

Total vcore-milliseconds taken by all map tasks=16521

Total megabyte-milliseconds taken by all map tasks=16917504

Map-Reduce Framework

Map input records=3244

Map output records=3244

Input split bytes=412

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=274

CPU time spent (ms)=6610

Physical memory (bytes) snapshot=726429696

Virtual memory (bytes) snapshot=8493514752

Total committed heap usage (bytes)=437256192

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=3799556

18/05/24 15:26:41 INFO mapreduce.ImportJobBase: Transferred 3.6235 MB in 17.587 seconds (210.9803 KB/sec)

18/05/24 15:26:41 INFO mapreduce.ImportJobBase: Retrieved 3244 records.

18/05/24 15:26:41 INFO mapreduce.ImportJobBase: Publishing Hive/Hcat import job data to Listeners for table files

18/05/24 15:26:41 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `files` AS t LIMIT 1

18/05/24 15:26:41 INFO hive.HiveImport: Loading uploaded data into Hive

18/05/24 15:26:43 INFO hive.HiveImport:

18/05/24 15:26:43 INFO hive.HiveImport: Logging initialized using configuration in jar:file:/opt/hive-1.2.2/lib/hive-exec-1.2.2.jar!/hive-log4j.properties

18/05/24 15:26:46 INFO hive.HiveImport: OK

18/05/24 15:26:46 INFO hive.HiveImport: Time taken: 1.753 seconds

18/05/24 15:26:46 INFO hive.HiveImport: Loading data to table default.files

18/05/24 15:26:47 INFO hive.HiveImport: Table default.files stats: [numFiles=4, totalSize=3799556]

18/05/24 15:26:47 INFO hive.HiveImport: OK

18/05/24 15:26:47 INFO hive.HiveImport: Time taken: 0.802 seconds

18/05/24 15:26:47 INFO hive.HiveImport: Hive import complete.

18/05/24 15:26:47 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

18/05/24 15:26:47 INFO tool.CodeGenTool: Beginning code generation

18/05/24 15:26:47 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `logs` AS t LIMIT 1

18/05/24 15:26:47 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/hadoop-2.7.5

Note: /tmp/sqoop-root/compile/a38c87c70af413cbb1ec75d0b0f5ad34/logs.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/05/24 15:26:48 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/a38c87c70af413cbb1ec75d0b0f5ad34/logs.jar

18/05/24 15:26:48 INFO mapreduce.ImportJobBase: Beginning import of logs

18/05/24 15:26:48 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

18/05/24 15:26:49 INFO db.DBInputFormat: Using read commited transaction isolation

18/05/24 15:26:49 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `logs`

18/05/24 15:26:49 INFO db.IntegerSplitter: Split size: 0; Num splits: 4 from: 1 to: 1

18/05/24 15:26:49 INFO mapreduce.JobSubmitter: number of splits:1

18/05/24 15:26:49 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1526097883376_0016

18/05/24 15:26:50 INFO impl.YarnClientImpl: Submitted application application_1526097883376_0016

18/05/24 15:26:50 INFO mapreduce.Job: The url to track the job: http://bigdata03-test:8088/proxy/application_1526097883376_0016/

18/05/24 15:26:50 INFO mapreduce.Job: Running job: job_1526097883376_0016

18/05/24 15:26:58 INFO mapreduce.Job: Job job_1526097883376_0016 running in uber mode : false

18/05/24 15:26:58 INFO mapreduce.Job: map 0% reduce 0%

18/05/24 15:27:05 INFO mapreduce.Job: map 100% reduce 0%

18/05/24 15:27:05 INFO mapreduce.Job: Job job_1526097883376_0016 completed successfully

18/05/24 15:27:05 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=218298

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=99

HDFS: Number of bytes written=47

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=4480

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=4480

Total vcore-milliseconds taken by all map tasks=4480

Total megabyte-milliseconds taken by all map tasks=4587520

Map-Reduce Framework

Map input records=1

Map output records=1

Input split bytes=99

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=65

CPU time spent (ms)=1560

Physical memory (bytes) snapshot=182292480

Virtual memory (bytes) snapshot=2124431360

Total committed heap usage (bytes)=112197632

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=47

18/05/24 15:27:05 INFO mapreduce.ImportJobBase: Transferred 47 bytes in 16.8987 seconds (2.7813 bytes/sec)

18/05/24 15:27:05 INFO mapreduce.ImportJobBase: Retrieved 1 records.

18/05/24 15:27:05 INFO mapreduce.ImportJobBase: Publishing Hive/Hcat import job data to Listeners for table logs

18/05/24 15:27:05 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `logs` AS t LIMIT 1

18/05/24 15:27:05 WARN hive.TableDefWriter: Column day had to be cast to a less precise type in Hive

18/05/24 15:27:05 INFO hive.HiveImport: Loading uploaded data into Hive

18/05/24 15:27:07 INFO hive.HiveImport:

18/05/24 15:27:07 INFO hive.HiveImport: Logging initialized using configuration in jar:file:/opt/hive-1.2.2/lib/hive-exec-1.2.2.jar!/hive-log4j.properties

18/05/24 15:27:10 INFO hive.HiveImport: OK

18/05/24 15:27:10 INFO hive.HiveImport: Time taken: 1.809 seconds

18/05/24 15:27:10 INFO hive.HiveImport: Loading data to table default.logs

18/05/24 15:27:11 INFO hive.HiveImport: Table default.logs stats: [numFiles=1, totalSize=47]

18/05/24 15:27:11 INFO hive.HiveImport: OK

18/05/24 15:27:11 INFO hive.HiveImport: Time taken: 0.694 seconds

18/05/24 15:27:11 INFO hive.HiveImport: Hive import complete.

18/05/24 15:27:11 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.
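The log above warns that putting the password on the command line is insecure and suggests -P. For non-interactive runs, Sqoop also accepts --password-file. Below is a minimal sketch of the step 1 import rewritten that way. It assumes a local file at a hypothetical path ($HOME/.sqoop-mysql.pwd), and the function names are hypothetical too. Setting DRY_RUN=1 only prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: rerun the step-1 import with the MySQL password read from a file
# instead of --password on the command line.
# Assumptions (not from the original post): the default file location and the
# function names are hypothetical; run it from the Sqoop directory as above.

PW_FILE="${PW_FILE:-$HOME/.sqoop-mysql.pwd}"

# Store the password without a trailing newline: Sqoop treats the whole file
# content, newline included, as the password.
write_password_file() {
    rm -f "$PW_FILE"
    # Subshell so the restrictive umask does not leak into the caller.
    ( umask 077 && printf '%s' "$1" > "$PW_FILE" )
    chmod 400 "$PW_FILE"
}

# Same options as step 1, with --password-file in place of --password.
# DRY_RUN=1 prints the command instead of running it.
import_all_tables() {
    run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then run="echo"; fi
    # file:// marks the path as local rather than on the cluster's default
    # filesystem (assumption: the default filesystem here is HDFS).
    $run bin/sqoop-import-all-tables \
        --connect jdbc:mysql://node1:3306/esdb \
        --username root \
        --password-file "file://$PW_FILE" \
        --hive-import \
        --create-hive-table
}
```

Call write_password_file once, ideally with a value read interactively rather than typed literally (which would land in shell history). After that, import_all_tables can run unattended.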

[root@node1 sqoop-1.4.7]#

hive> show tables;

OK

files

logs

t1

Time taken: 0.064 seconds, Fetched: 5 row(s)

hive> select count(*) from files;

Query ID = root_20180524152738_798fe3b4-acf1-48a1-a90a-5060fe30269a

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1526097883376_0017, Tracking URL = http://ndoe1:8088/proxy/application_1526097883376_0017/

Kill Command = /opt/hadoop-2.7.5/bin/hadoop job -kill job_1526097883376_0017

Hadoop job information for Stage-1: number of mappers: 3; number of reducers: 1

2018-05-24 15:27:45,426 Stage-1 map = 0%, reduce = 0%

2018-05-24 15:27:51,784 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.4 sec

2018-05-24 15:27:59,017 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 8.39 sec

MapReduce Total cumulative CPU time: 8 seconds 390 msec

Ended Job = job_1526097883376_0017

MapReduce Jobs Launched:

Stage-Stage-1: Map: 3 Reduce: 1 Cumulative CPU: 8.39 sec HDFS Read: 3816408 HDFS Write: 5 SUCCESS

Total MapReduce CPU Time Spent: 8 seconds 390 msec

OK

3244

Time taken: 21.453 seconds, Fetched: 1 row(s)

hive>

2. Import into a specified Hive database.

By default, tables are imported into the Hive database named default. To import into a specific database instead, use the --hive-database parameter.
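Creating the target database and passing --hive-database can be combined into one script. Below is a minimal sketch. It assumes the hive CLI is on PATH and the script runs from the Sqoop directory, as in the post. The function name import_into_hive_db and the DRY_RUN switch are hypothetical. The script uses -P, as the Sqoop warning recommends, so the password is prompted for rather than passed in plain text.

```shell
#!/bin/sh
# Sketch: import all tables of a MySQL database into a chosen Hive database,
# creating it first if needed (the post does this by hand with
# "create database test;").
# Assumptions: the function name and DRY_RUN switch are hypothetical; hive is
# on PATH and the script runs from the Sqoop directory, as in the post.

import_into_hive_db() {
    mysql_db="$1"              # source MySQL database, e.g. esdb
    hive_db="${2:-default}"    # target Hive database; "default" if omitted
    run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then run="echo"; fi

    # IF NOT EXISTS keeps this step harmless when the database already exists.
    $run hive -e "CREATE DATABASE IF NOT EXISTS ${hive_db};" || return 1

    # -P prompts for the password instead of exposing it on the command line.
    $run bin/sqoop-import-all-tables \
        --connect "jdbc:mysql://node1:3306/${mysql_db}" \
        --username root -P \
        --hive-import \
        --hive-database "$hive_db" \
        --create-hive-table
}
```

For example, import_into_hive_db esdb test corresponds to the run below. With --create-hive-table, the job fails if the target Hive table already exists. A second run against the same database therefore fails unless that option is dropped or the tables are removed first.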

hive> create database test;

OK

Time taken: 0.158 seconds

hive>

[root@node1 sqoop-1.4.7]# bin/sqoop-import-all-tables --connect jdbc:mysql://node1:3306/esdb --username root --password 123456 --hive-import --hive-database test --create-hive-table

Warning: /opt/sqoop-1.4.7/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

18/05/24 16:00:05 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

18/05/24 16:00:06 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/05/24 16:00:06 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override

18/05/24 16:00:06 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.

18/05/24 16:00:06 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/05/24 16:00:06 INFO tool.CodeGenTool: Beginning code generation

18/05/24 16:00:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `files` AS t LIMIT 1

18/05/24 16:00:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `files` AS t LIMIT 1

18/05/24 16:00:06 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/hadoop-2.7.5

Note: /tmp/sqoop-root/compile/3036daf1aa7539d081b68965b6d1b061/files.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/05/24 16:00:08 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/3036daf1aa7539d081b68965b6d1b061/files.jar

18/05/24 16:00:08 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/05/24 16:00:08 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/05/24 16:00:08 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/05/24 16:00:08 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/05/24 16:00:08 INFO mapreduce.ImportJobBase: Beginning import of files

18/05/24 16:00:08 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/05/24 16:00:09 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/05/24 16:00:10 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

18/05/24 16:00:12 INFO db.DBInputFormat: Using read commited transaction isolation

18/05/24 16:00:12 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `files`

18/05/24 16:00:12 INFO db.IntegerSplitter: Split size: 810; Num splits: 4 from: 1 to: 3244

18/05/24 16:00:12 INFO mapreduce.JobSubmitter: number of splits:4

18/05/24 16:00:12 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1526097883376_0019

18/05/24 16:00:12 INFO impl.YarnClientImpl: Submitted application application_1526097883376_0019

18/05/24 16:00:12 INFO mapreduce.Job: The url to track the job: http://bigdata03-test:8088/proxy/application_1526097883376_0019/

18/05/24 16:00:12 INFO mapreduce.Job: Running job: job_1526097883376_0019

18/05/24 16:00:20 INFO mapreduce.Job: Job job_1526097883376_0019 running in uber mode : false

18/05/24 16:00:20 INFO mapreduce.Job: map 0% reduce 0%

18/05/24 16:00:26 INFO mapreduce.Job: map 25% reduce 0%

18/05/24 16:00:27 INFO mapreduce.Job: map 100% reduce 0%

18/05/24 16:00:27 INFO mapreduce.Job: Job job_1526097883376_0019 completed successfully

18/05/24 16:00:28 INFO mapreduce.Job: Counters: 31

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=570260

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=412

HDFS: Number of bytes written=3799556

HDFS: Number of read operations=16

HDFS: Number of large read operations=0

HDFS: Number of write operations=8

Job Counters

Killed map tasks=1

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=17383

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=17383

Total vcore-milliseconds taken by all map tasks=17383

Total megabyte-milliseconds taken by all map tasks=17800192

Map-Reduce Framework

Map input records=3244

Map output records=3244

Input split bytes=412

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=283

CPU time spent (ms)=7450

Physical memory (bytes) snapshot=732844032

Virtual memory (bytes) snapshot=8491610112

Total committed heap usage (bytes)=444596224

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=3799556

18/05/24 16:00:28 INFO mapreduce.ImportJobBase: Transferred 3.6235 MB in 18.5387 seconds (200.1492 KB/sec)

18/05/24 16:00:28 INFO mapreduce.ImportJobBase: Retrieved 3244 records.

18/05/24 16:00:28 INFO mapreduce.ImportJobBase: Publishing Hive/Hcat import job data to Listeners for table files

18/05/24 16:00:28 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `files` AS t LIMIT 1

18/05/24 16:00:28 INFO hive.HiveImport: Loading uploaded data into Hive

18/05/24 16:00:30 INFO hive.HiveImport:

18/05/24 16:00:30 INFO hive.HiveImport: Logging initialized using configuration in jar:file:/opt/hive-1.2.2/lib/hive-exec-1.2.2.jar!/hive-log4j.properties

18/05/24 16:00:33 INFO hive.HiveImport: OK

18/05/24 16:00:33 INFO hive.HiveImport: Time taken: 1.786 seconds

18/05/24 16:00:33 INFO hive.HiveImport: Loading data to table test.files

18/05/24 16:00:34 INFO hive.HiveImport: Table test.files stats: [numFiles=4, totalSize=3799556]

18/05/24 16:00:34 INFO hive.HiveImport: OK

18/05/24 16:00:34 INFO hive.HiveImport: Time taken: 0.797 seconds

18/05/24 16:00:34 INFO hive.HiveImport: Hive import complete.

18/05/24 16:00:34 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

18/05/24 16:00:34 INFO tool.CodeGenTool: Beginning code generation

18/05/24 16:00:34 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `logs` AS t LIMIT 1

18/05/24 16:00:34 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/hadoop-2.7.5

Note: /tmp/sqoop-root/compile/3036daf1aa7539d081b68965b6d1b061/logs.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/05/24 16:00:35 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/3036daf1aa7539d081b68965b6d1b061/logs.jar

18/05/24 16:00:35 INFO mapreduce.ImportJobBase: Beginning import of logs

18/05/24 16:00:35 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

18/05/24 16:00:36 INFO db.DBInputFormat: Using read commited transaction isolation

18/05/24 16:00:36 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `logs`

18/05/24 16:00:36 INFO db.IntegerSplitter: Split size: 0; Num splits: 4 from: 1 to: 1

18/05/24 16:00:36 INFO mapreduce.JobSubmitter: number of splits:1

18/05/24 16:00:36 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1526097883376_0020

18/05/24 16:00:36 INFO impl.YarnClientImpl: Submitted application application_1526097883376_0020

18/05/24 16:00:36 INFO mapreduce.Job: The url to track the job: http://bigdata03-test:8088/proxy/application_1526097883376_0020/

18/05/24 16:00:36 INFO mapreduce.Job: Running job: job_1526097883376_0020

18/05/24 16:00:44 INFO mapreduce.Job: Job job_1526097883376_0020 running in uber mode : false

18/05/24 16:00:44 INFO mapreduce.Job: map 0% reduce 0%

18/05/24 16:00:51 INFO mapreduce.Job: map 100% reduce 0%

18/05/24 16:00:51 INFO mapreduce.Job: Job job_1526097883376_0020 completed successfully

18/05/24 16:00:51 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=218298

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=99

HDFS: Number of bytes written=47

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=4155

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=4155

Total vcore-milliseconds taken by all map tasks=4155

Total megabyte-milliseconds taken by all map tasks=4254720

Map-Reduce Framework

Map input records=1

Map output records=1

Input split bytes=99

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=60

CPU time spent (ms)=1380

Physical memory (bytes) snapshot=183681024

Virtual memory (bytes) snapshot=2122481664

Total committed heap usage (bytes)=111149056

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=47

18/05/24 16:00:51 INFO mapreduce.ImportJobBase: Transferred 47 bytes in 15.9943 seconds (2.9385 bytes/sec)

18/05/24 16:00:51 INFO mapreduce.ImportJobBase: Retrieved 1 records.

18/05/24 16:00:51 INFO mapreduce.ImportJobBase: Publishing Hive/Hcat import job data to Listeners for table logs

18/05/24 16:00:51 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `logs` AS t LIMIT 1

18/05/24 16:00:51 WARN hive.TableDefWriter: Column day had to be cast to a less precise type in Hive

18/05/24 16:00:51 INFO hive.HiveImport: Loading uploaded data into Hive

18/05/24 16:00:53 INFO hive.HiveImport:

18/05/24 16:00:53 INFO hive.HiveImport: Logging initialized using configuration in jar:file:/opt/hive-1.2.2/lib/hive-exec-1.2.2.jar!/hive-log4j.properties

18/05/24 16:00:56 INFO hive.HiveImport: OK

18/05/24 16:00:56 INFO hive.HiveImport: Time taken: 1.805 seconds

18/05/24 16:00:56 INFO hive.HiveImport: Loading data to table test.logs

18/05/24 16:00:57 INFO hive.HiveImport: Table test.logs stats: [numFiles=1, totalSize=47]

18/05/24 16:00:57 INFO hive.HiveImport: OK

18/05/24 16:00:57 INFO hive.HiveImport: Time taken: 0.735 seconds

18/05/24 16:00:57 INFO hive.HiveImport: Hive import complete.

18/05/24 16:00:57 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

[root@node1 sqoop-1.4.7]#

hive> use test;

OK

Time taken: 0.03 seconds

hive> show tables;

OK

files

logs

Time taken: 0.032 seconds, Fetched: 2 row(s)

hive> select count(*) from files;

Query ID = root_20180524160128_5de1a451-e3b8-4b18-8311-653a22244470

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1526097883376_0021, Tracking URL = http://bigdata03-test:8088/proxy/application_1526097883376_0021/

Kill Command = /opt/hadoop-2.7.5/bin/hadoop job -kill job_1526097883376_0021

Hadoop job information for Stage-1: number of mappers: 3; number of reducers: 1

2018-05-24 16:01:35,411 Stage-1 map = 0%, reduce = 0%

2018-05-24 16:01:41,652 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.71 sec

2018-05-24 16:01:48,945 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 8.75 sec

MapReduce Total cumulative CPU time: 8 seconds 750 msec

Ended Job = job_1526097883376_0021

MapReduce Jobs Launched:

Stage-Stage-1: Map: 3 Reduce: 1 Cumulative CPU: 8.75 sec HDFS Read: 3816501 HDFS Write: 5 SUCCESS

Total MapReduce CPU Time Spent: 8 seconds 750 msec

OK

3244

Time taken: 21.33 seconds, Fetched: 1 row(s)

hive>
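The final select count(*) returns 3244, which matches "Retrieved 3244 records" in the Sqoop log. That check can be repeated for every imported table with a small script. Below is a minimal sketch. It assumes the mysql and hive CLIs are installed and that the MySQL credentials come from an option file such as ~/.my.cnf. It also assumes hive -S prints only the query result on stdout. All function names are hypothetical.

```shell
#!/bin/sh
# Sketch: compare per-table row counts between MySQL and Hive, automating the
# manual "select count(*)" check.
# Assumptions: function names are hypothetical; the mysql client takes its
# credentials from an option file such as ~/.my.cnf; "hive -S" prints only
# the query result on stdout.

mysql_count() {  # $1 = MySQL database, $2 = table
    mysql -N -B -e "SELECT COUNT(*) FROM \`$1\`.\`$2\`"
}

hive_count() {   # $1 = Hive database, $2 = table
    hive -S -e "SELECT COUNT(*) FROM $1.$2"
}

# compare_counts SRC_DB DST_DB TABLE...: one line per table; returns 1 if any
# count differs.
compare_counts() {
    src_db="$1"; dst_db="$2"; shift 2
    status=0
    for t in "$@"; do
        src=$(mysql_count "$src_db" "$t")
        dst=$(hive_count "$dst_db" "$t")
        if [ "$src" = "$dst" ]; then
            echo "OK $t $src"
        else
            echo "DIFF $t mysql=$src hive=$dst"
            status=1
        fi
    done
    return "$status"
}
```

For example, compare_counts esdb test files logs prints one line per table and exits non-zero on any mismatch. This makes it usable as a post-import check. Keeping the two count functions separate also makes them easy to replace, for example with stubs when testing.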

Углубленный анализ переполнения памяти CUDA: OutOfMemoryError: CUDA не хватает памяти. Попыталась выделить 3,21 Ги Б (GPU 0; всего 8,00 Ги Б).

[Решено] ошибка установки conda. Среда решения: не удалось выполнить первоначальное зависание. Повторная попытка с помощью файла (графическое руководство).

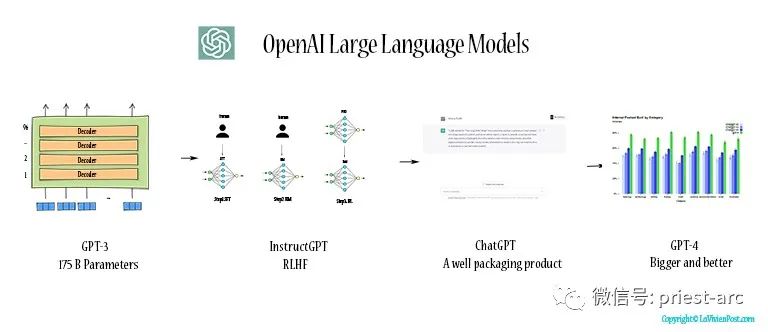

Прочитайте нейросетевую модель Трансформера в одной статье

.ART Теплые зимние предложения уже открыты

Сравнительная таблица описания кодов ошибок Amap

Уведомление о последних правилах Points Mall в декабре 2022 года.

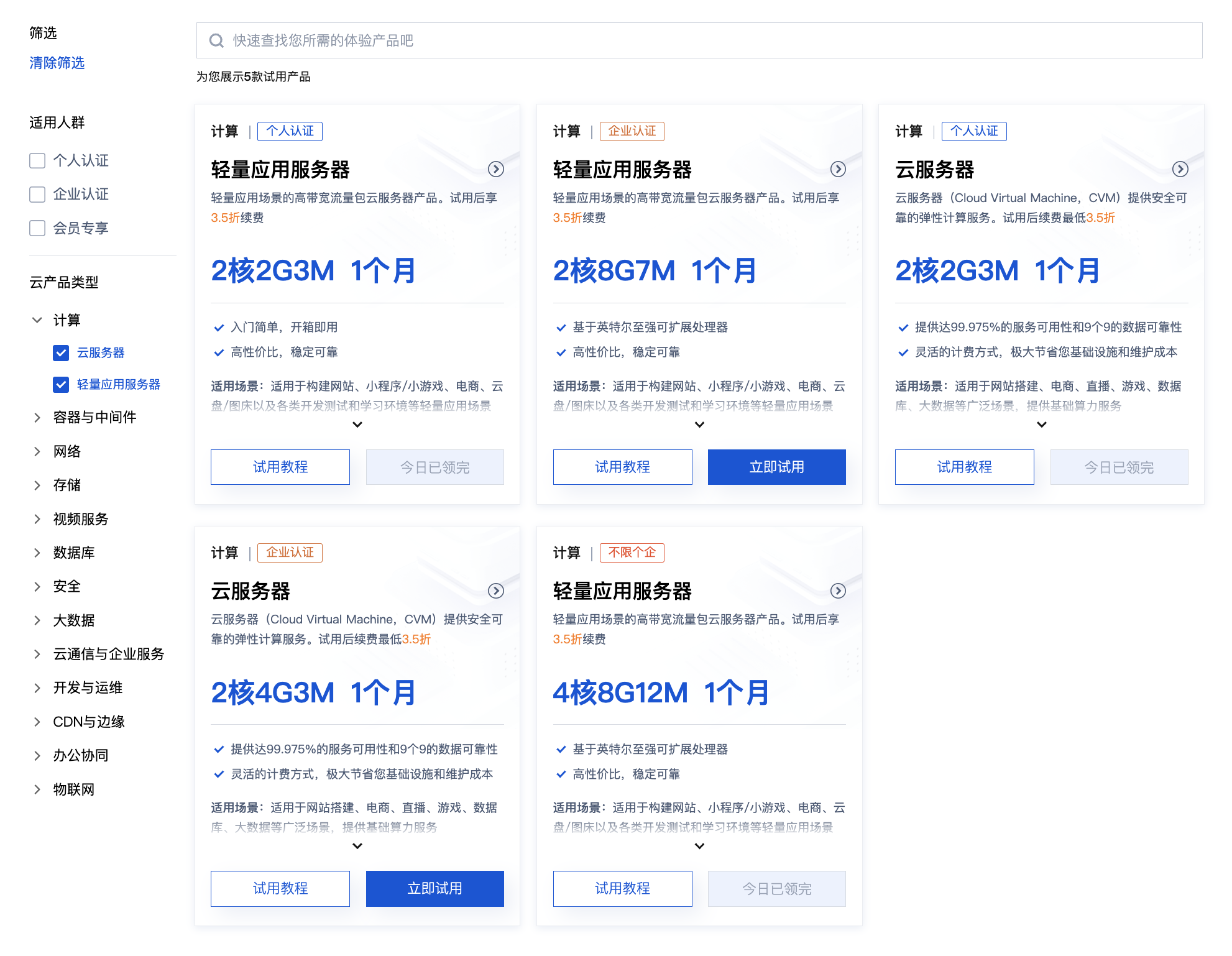

Даже новички могут быстро приступить к работе с легким сервером приложений.

Взгляд на RSAC 2024|Защита конфиденциальности в эпоху больших моделей

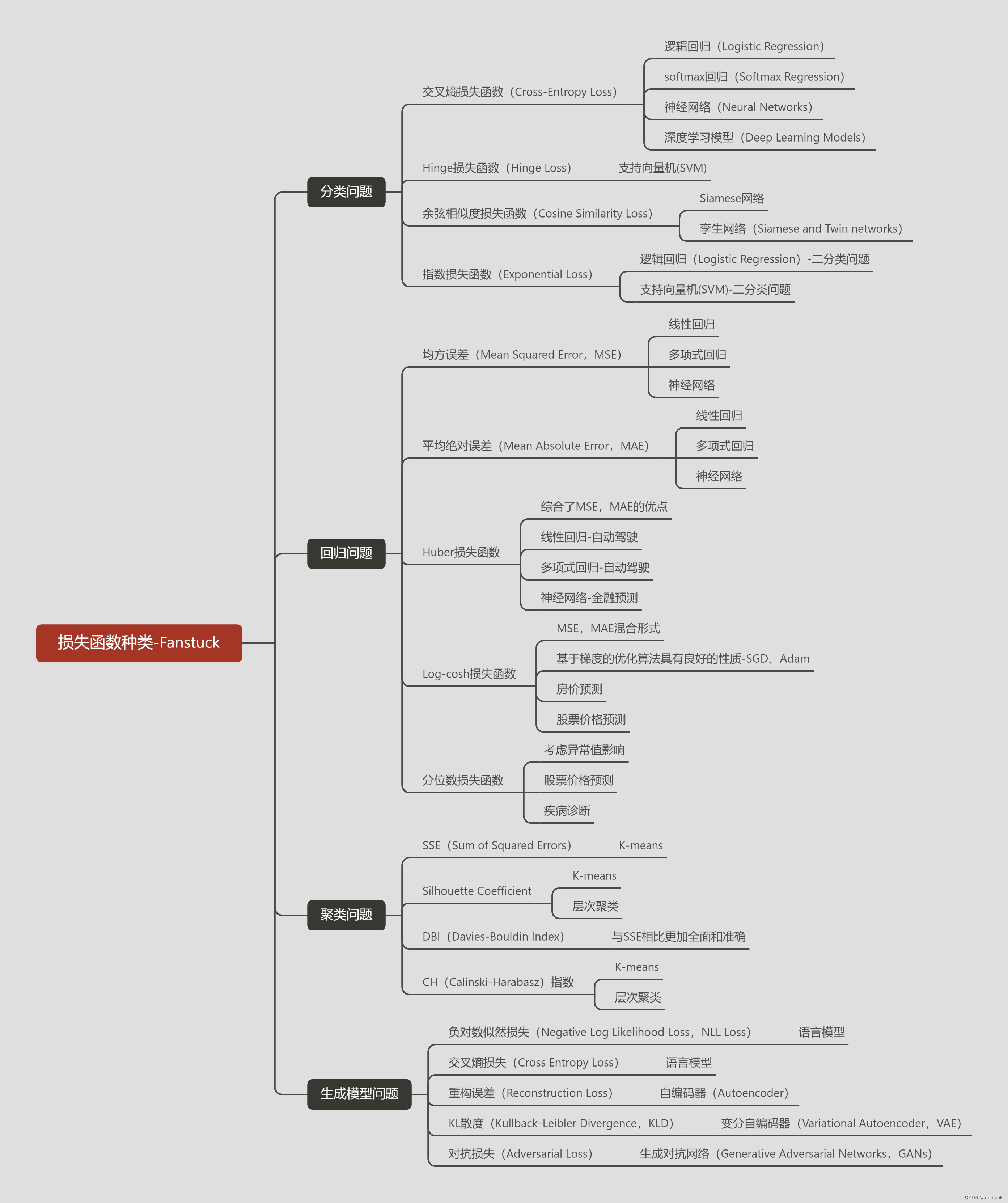

Вы используете ИИ каждый день и до сих пор не знаете, как ИИ дает обратную связь? Одна статья для понимания реализации в коде Python общих функций потерь генеративных моделей + анализ принципов расчета.

Используйте (внутренний) почтовый ящик для образовательных учреждений, чтобы использовать Microsoft Family Bucket (1T дискового пространства на одном диске и версию Office 365 для образовательных учреждений)

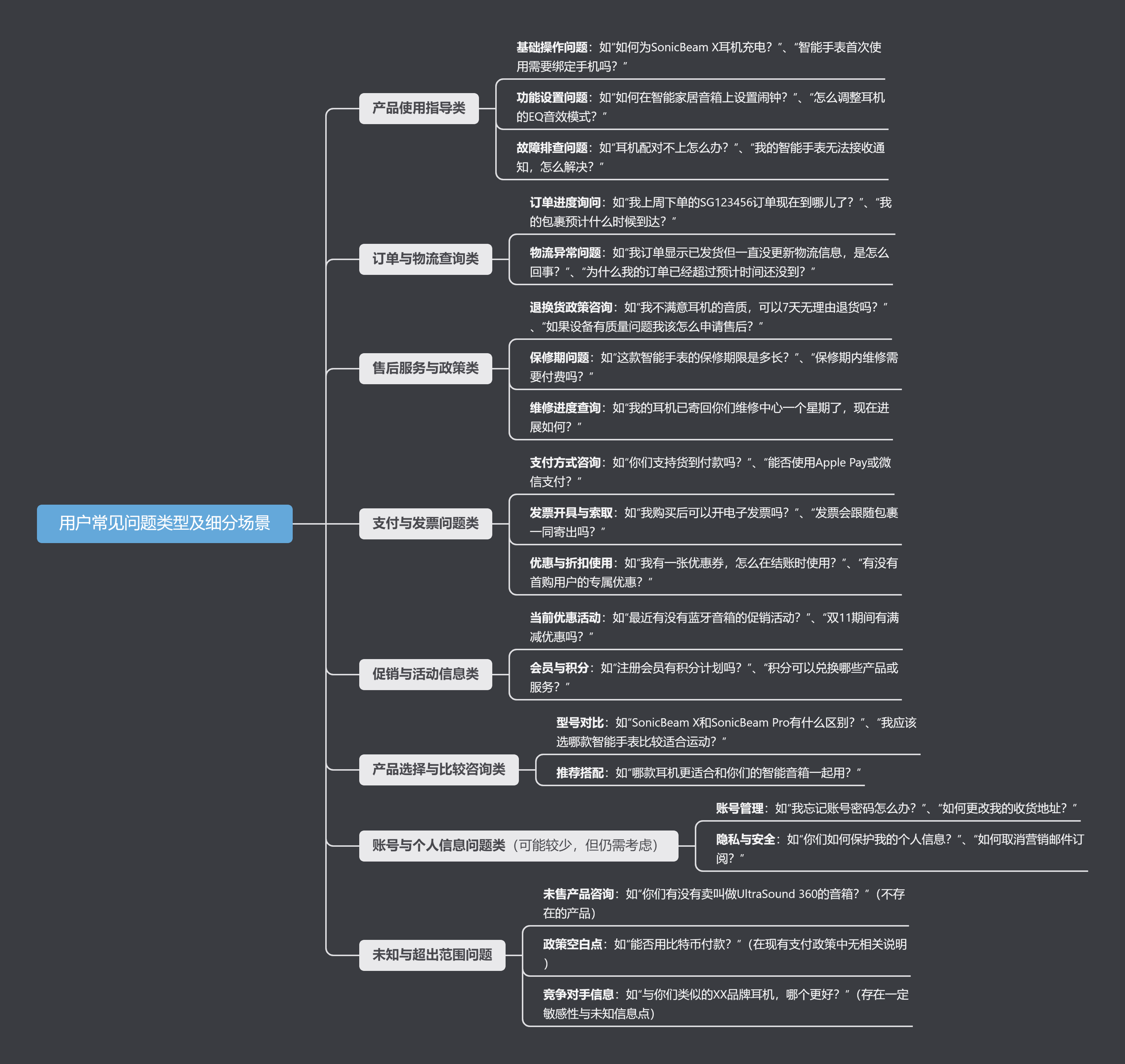

Руководство по началу работы с оперативным проектом (7) Практическое сочетание оперативного письма — оперативного письма на основе интеллектуальной системы вопросов и ответов службы поддержки клиентов

[docker] Версия сервера «Чтение 3» — создайте свою собственную программу чтения веб-текста

Обзор Cloud-init и этапы создания в рамках PVE

Корпоративные пользователи используют пакет регистрационных ресурсов для регистрации ICP для веб-сайта и активации оплаты WeChat H5 (с кодом платежного узла версии API V3)

Подробное объяснение таких показателей производительности с высоким уровнем параллелизма, как QPS, TPS, RT и пропускная способность.

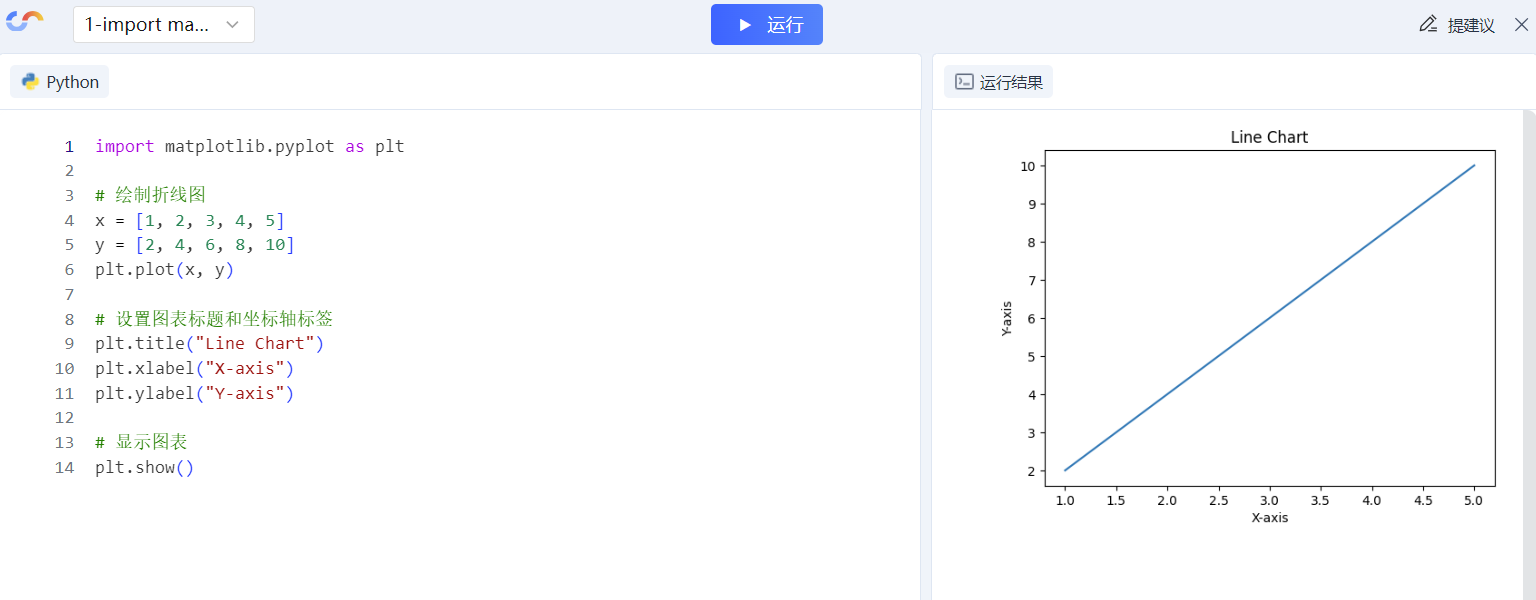

Удачи в конкурсе Python Essay Challenge, станьте первым, кто испытает новую функцию сообщества [Запускать блоки кода онлайн] и выиграйте множество изысканных подарков!

[Техническая посадка травы] Кровавая рвота и отделка позволяют вам необычным образом ощипывать гусиные перья! Не распространяйте информацию! ! !

[Официальное ограниченное по времени мероприятие] Сейчас ноябрь, напишите и получите приз

Прочтите это в одной статье: Учебник для няни по созданию сервера Huanshou Parlu на базе CVM-сервера.

Cloud Native | Что такое CRD (настраиваемые определения ресурсов) в K8s?

Как использовать Cloudflare CDN для настройки узла (CF самостоятельно выбирает IP) Гонконг, Китай/Азия узел/сводка и рекомендации внутреннего высокоскоростного IP-сегмента

Дополнительные правила вознаграждения амбассадоров акции в марте 2023 г.

Можно ли открыть частный сервер Phantom Beast Palu одним щелчком мыши? Супер простой урок для начинающих! (Прилагается метод обновления сервера)

[Играйте с Phantom Beast Palu] Обновите игровой сервер Phantom Beast Pallu одним щелчком мыши

Maotouhu делится: последний доступный внутри страны адрес склада исходного образа Docker 2024 года (обновлено 1 декабря)

Кодирование Base64 в MultipartFile

5 точек расширения SpringBoot, супер практично!

Глубокое понимание сопоставления индексов Elasticsearch.