Camera System | HFR Use Case Analysis

1. Introduction to high-frame-rate video recording

High-frame-rate video is the basis of slow motion. Video is commonly played back at 24 frames per second, a rate the human eye accepts as smooth motion. If an action is captured at 120 frames per second and played back at 24 frames per second, it appears 5 times slower.
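The arithmetic above can be sketched in a few lines of Java. The class and method names (SlowMotionFactor, slowdown) are hypothetical and exist only for illustration; the sketch simply divides the capture rate by the playback rate.

```java
public class SlowMotionFactor {

    /**
     * Returns how many times slower the footage appears when it is
     * captured at captureFps and played back at playbackFps.
     */
    public static double slowdown(int captureFps, int playbackFps) {
        if (captureFps <= 0 || playbackFps <= 0) {
            throw new IllegalArgumentException("frame rates must be positive");
        }
        return (double) captureFps / playbackFps;
    }

    public static void main(String[] args) {
        // 120 fps capture played back at 24 fps.
        System.out.println(slowdown(120, 24)); // prints 5.0
    }
}
```

The same function shows why higher capture rates give a stronger effect: doubling the capture rate at a fixed playback rate doubles the slowdown.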

Slow-motion features on the Qualcomm platform:

- High Speed Recording (HSR): capture, encode, and save at a high frame rate (the operating rate); the operating rate equals the target rate.

- High Frame Rate recording (HFR): capture and encode at a high frame rate (the operating rate) and save at 30 fps (the target rate); the operating rate is higher than the target rate.
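The distinction between the two features comes down to comparing the operating rate with the target rate. The following sketch restates that rule; SlowMotionMode and classify are hypothetical names, and the sketch assumes the operating rate is already a high frame rate, so it does not model normal-speed recording.

```java
public final class SlowMotionMode {

    public enum Mode { HSR, HFR }

    /**
     * Classifies a recording by the rule stated above:
     * operating rate == target rate -> HSR, operating rate > target rate -> HFR.
     */
    public static Mode classify(int operatingFps, int targetFps) {
        if (operatingFps <= 0 || targetFps <= 0) {
            throw new IllegalArgumentException("frame rates must be positive");
        }
        if (operatingFps < targetFps) {
            throw new IllegalArgumentException(
                    "operating rate cannot be lower than the target rate");
        }
        return operatingFps == targetFps ? Mode.HSR : Mode.HFR;
    }

    public static void main(String[] args) {
        System.out.println(classify(120, 120)); // prints HSR
        System.out.println(classify(120, 30));  // prints HFR
    }
}
```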

2. Code flow analysis

1. The application starts recording.

Path: \packages\apps\SnapdragonCamera\src\com\android\camera\CaptureModule.java

private boolean startRecordingVideo(final int cameraId) {

...

if (ApiHelper.isAndroidPOrHigher()) {

if (mHighSpeedCapture && ((int) mHighSpeedFPSRange.getUpper() > NORMAL_SESSION_MAX_FPS)) {

CaptureRequest initialRequest = mVideoRequestBuilder.build();

buildConstrainedCameraSession(mCameraDevice[cameraId], surfaces,

mSessionListener, mCameraHandler, initialRequest);

} else {

configureCameraSessionWithParameters(cameraId, surfaces,

mSessionListener, mCameraHandler, mVideoRequestBuilder.build());

}

} else {

// If HFR is enabled and the maximum frame rate exceeds NORMAL_SESSION_MAX_FPS (60 fps),

// the session is created with createConstrainedHighSpeedCaptureSession;

// otherwise createCaptureSession is used.

if (mHighSpeedCapture && ((int) mHighSpeedFPSRange.getUpper() > NORMAL_SESSION_MAX_FPS)) { // Create the high-speed session

mCameraDevice[cameraId].createConstrainedHighSpeedCaptureSession(surfaces, new

CameraConstrainedHighSpeedCaptureSession.StateCallback() {

@Override

public void onConfigured(CameraCaptureSession cameraCaptureSession) {

mCurrentSession = cameraCaptureSession;

Log.v(TAG, "createConstrainedHighSpeedCaptureSession onConfigured");

mCaptureSession[cameraId] = cameraCaptureSession;

CameraConstrainedHighSpeedCaptureSession session =

(CameraConstrainedHighSpeedCaptureSession) mCurrentSession;

try {

setUpVideoCaptureRequestBuilder(mVideoRequestBuilder, cameraId);

List list = CameraUtil

.createHighSpeedRequestList(mVideoRequestBuilder.build());

// Submit several requests at once through setRepeatingBurst.

// The corresponding native method is submitRequestList.

session.setRepeatingBurst(list, mCaptureCallback, mCameraHandler);

} catch (CameraAccessException e) {

Log.e(TAG, "Failed to start high speed video recording " + e.getMessage());

e.printStackTrace();

} catch (IllegalArgumentException e) {

Log.e(TAG, "Failed to start high speed video recording " + e.getMessage());

e.printStackTrace();

} catch (IllegalStateException e) {

Log.e(TAG, "Failed to start high speed video recording " + e.getMessage());

e.printStackTrace();

}

if (!mFrameProcessor.isFrameListnerEnabled() && !startMediaRecorder()) {

startRecordingFailed();

return;

}

}

}, null);

} else {

surfaces.add(mVideoSnapshotImageReader.getSurface());

String zzHDR = mSettingsManager.getValue(SettingsManager.KEY_VIDEO_HDR_VALUE);

boolean zzHdrStatue = zzHDR.equals("1");

// If ZZHDR mode is enabled, don't call the setOpModeForVideoStream method.

if (!zzHdrStatue) {

setOpModeForVideoStream(cameraId);

}

String value = mSettingsManager.getValue(SettingsManager.KEY_FOVC_VALUE);

if (value != null && Boolean.parseBoolean(value)) {

mStreamConfigOptMode = mStreamConfigOptMode | STREAM_CONFIG_MODE_FOVC;

}

if (zzHdrStatue) {

mStreamConfigOptMode = STREAM_CONFIG_MODE_ZZHDR;

}

if (DEBUG) {

Log.v(TAG, "createCustomCaptureSession mStreamConfigOptMode :"

+ mStreamConfigOptMode);

}

if (mStreamConfigOptMode == 0) { // Normal session; note that this flow calls setOpModeForVideoStream, which changes config->operation_mode.

mCameraDevice[cameraId].createCaptureSession(surfaces, mCCSSateCallback, null);

} else {

List<OutputConfiguration> outConfigurations = new ArrayList<>(surfaces.size());

for (Surface sface : surfaces) {

outConfigurations.add(new OutputConfiguration(sface));

}

mCameraDevice[cameraId].createCustomCaptureSession(null, outConfigurations,

mStreamConfigOptMode, mCCSSateCallback, null);

}

}

}

}

...

2. HFR configuration flow

File: \frameworks\base\core\java\android\hardware\camera2\CameraDevice.java

// Interface for creating a constrained high-speed capture session

public abstract void createConstrainedHighSpeedCaptureSession(@NonNull List<Surface> outputs,

@NonNull CameraCaptureSession.StateCallback callback,

@Nullable Handler handler)

throws CameraAccessException;

The concrete implementation is in: \frameworks\base\core\java\android\hardware\camera2\impl\CameraDeviceImpl.java

@Override

public void createConstrainedHighSpeedCaptureSession(List<Surface> outputs,

android.hardware.camera2.CameraCaptureSession.StateCallback callback, Handler handler)

throws CameraAccessException {

if (outputs == null || outputs.size() == 0 || outputs.size() > 2) {

throw new IllegalArgumentException(

"Output surface list must not be null and the size must be no more than 2");

}

List<OutputConfiguration> outConfigurations = new ArrayList<>(outputs.size());

for (Surface surface : outputs) {

outConfigurations.add(new OutputConfiguration(surface));

}

createCaptureSessionInternal(null, outConfigurations, callback,

checkAndWrapHandler(handler),

/*operatingMode*/ICameraDeviceUser.CONSTRAINED_HIGH_SPEED_MODE,

/*sessionParams*/ null);

}

The createCaptureSessionInternal function is implemented as follows:

private void createCaptureSessionInternal(InputConfiguration inputConfig,

List<OutputConfiguration> outputConfigurations,

CameraCaptureSession.StateCallback callback, Executor executor,

int operatingMode, CaptureRequest sessionParams) throws CameraAccessException {

synchronized(mInterfaceLock) {

if (DEBUG) {

Log.d(TAG, "createCaptureSessionInternal");

}

checkIfCameraClosedOrInError();

boolean isConstrainedHighSpeed =

(operatingMode == ICameraDeviceUser.CONSTRAINED_HIGH_SPEED_MODE);

if (isConstrainedHighSpeed && inputConfig != null) {

throw new IllegalArgumentException("Constrained high speed session doesn't support"

+ " input configuration yet.");

}

// Notify current session that it's going away, before starting camera operations

// After this call completes, the session is not allowed to call into CameraDeviceImpl

if (mCurrentSession != null) {

mCurrentSession.replaceSessionClose();

}

// TODO: dont block for this

boolean configureSuccess = true;

CameraAccessException pendingException = null;

Surface input = null;

try {

// configure streams and then block until IDLE

// Device properties are queried inside this call

configureSuccess = configureStreamsChecked(inputConfig, outputConfigurations,

operatingMode, sessionParams);

if (configureSuccess == true && inputConfig != null) {

input = mRemoteDevice.getInputSurface();

}

} catch (CameraAccessException e) {

configureSuccess = false;

pendingException = e;

input = null;

if (DEBUG) {

Log.v(TAG, "createCaptureSession - failed with exception ", e);

}

}

// Fire onConfigured if configureOutputs succeeded, fire onConfigureFailed otherwise.

CameraCaptureSessionCore newSession = null;

if (isConstrainedHighSpeed) {

ArrayList<Surface> surfaces = new ArrayList<>(outputConfigurations.size());

for (OutputConfiguration outConfig : outputConfigurations) {

surfaces.add(outConfig.getSurface());

}

StreamConfigurationMap config =

getCharacteristics().get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

// Check that the format is correct, that the frame rate is within the supported range, that the surfaces are preview/video-encoder streams, and so on.

SurfaceUtils.checkConstrainedHighSpeedSurfaces(surfaces, /*fpsRange*/null, config);

newSession = new CameraConstrainedHighSpeedCaptureSessionImpl(mNextSessionId++,

callback, executor, this, mDeviceExecutor, configureSuccess,

mCharacteristics);

} else {

newSession = new CameraCaptureSessionImpl(mNextSessionId++, input,

callback, executor, this, mDeviceExecutor, configureSuccess);

}

// TODO: wait until current session closes, then create the new session

mCurrentSession = newSession;

if (pendingException != null) {

throw pendingException;

}

mSessionStateCallback = mCurrentSession.getDeviceStateCallback();

}

}

Next, analyze configureStreamsChecked():

public boolean configureStreamsChecked(InputConfiguration inputConfig,

List<OutputConfiguration> outputs, int operatingMode, CaptureRequest sessionParams)

throws CameraAccessException {

// Treat a null input the same an empty list

if (outputs == null) {

outputs = new ArrayList<OutputConfiguration>();

}

if (outputs.size() == 0 && inputConfig != null) {

throw new IllegalArgumentException("cannot configure an input stream without " +

"any output streams");

}

checkInputConfiguration(inputConfig);

boolean success = false;

synchronized(mInterfaceLock) {

checkIfCameraClosedOrInError();

// Streams to create

HashSet<OutputConfiguration> addSet = new HashSet<OutputConfiguration>(outputs);

// Streams to delete

List<Integer> deleteList = new ArrayList<Integer>();

// Determine which streams need to be created, which to be deleted

for (int i = 0; i < mConfiguredOutputs.size(); ++i) {

int streamId = mConfiguredOutputs.keyAt(i);

OutputConfiguration outConfig = mConfiguredOutputs.valueAt(i);

if (!outputs.contains(outConfig) || outConfig.isDeferredConfiguration()) {

// Always delete the deferred output configuration when the session

// is created, as the deferred output configuration doesn't have unique surface

// related identifies.

deleteList.add(streamId);

} else {

addSet.remove(outConfig); // Don't create a stream previously created

}

}

mDeviceExecutor.execute(mCallOnBusy);

stopRepeating();

try {

waitUntilIdle();

// Begin configuration

mRemoteDevice.beginConfigure();

// reconfigure the input stream if the input configuration is different.

InputConfiguration currentInputConfig = mConfiguredInput.getValue();

if (inputConfig != currentInputConfig &&

(inputConfig == null || !inputConfig.equals(currentInputConfig))) {

if (currentInputConfig != null) {

mRemoteDevice.deleteStream(mConfiguredInput.getKey());

mConfiguredInput = new SimpleEntry<Integer, InputConfiguration>(

REQUEST_ID_NONE, null);

}

if (inputConfig != null) {

int streamId = mRemoteDevice.createInputStream(inputConfig.getWidth(),

inputConfig.getHeight(), inputConfig.getFormat());

mConfiguredInput = new SimpleEntry<Integer, InputConfiguration>(

streamId, inputConfig);

}

}

// Delete all streams first (to free up HW resources)

for (Integer streamId : deleteList) {

mRemoteDevice.deleteStream(streamId);

mConfiguredOutputs.delete(streamId);

}

// Add all new streams

for (OutputConfiguration outConfig : outputs) {

if (addSet.contains(outConfig)) {

int streamId = mRemoteDevice.createStream(outConfig);

mConfiguredOutputs.put(streamId, outConfig);

}

}

// customOpMode can be changed through setOpModeForVideoStream.

// CameraConstrainedHighSpeedCaptureSessionImpl does not change this value.

operatingMode = (operatingMode | (customOpMode << 16));

// Finish configuring the streams.

// mRemoteDevice is of type ICameraDeviceUserWrapper;

// it is obtained when the camera is opened.

if (sessionParams != null) {

mRemoteDevice.endConfigure(operatingMode, sessionParams.getNativeCopy());

} else {

mRemoteDevice.endConfigure(operatingMode, null);

}

success = true;

} catch (IllegalArgumentException e) {

// OK. camera service can reject stream config if it's not supported by HAL

// This is only the result of a programmer misusing the camera2 api.

Log.w(TAG, "Stream configuration failed due to: " + e.getMessage());

return false;

} catch (CameraAccessException e) {

if (e.getReason() == CameraAccessException.CAMERA_IN_USE) {

throw new IllegalStateException("The camera is currently busy." +

" You must wait until the previous operation completes.", e);

}

throw e;

} finally {

if (success && outputs.size() > 0) {

mDeviceExecutor.execute(mCallOnIdle);

} else {

// Always return to the 'unconfigured' state if we didn't hit a fatal error

mDeviceExecutor.execute(mCallOnUnconfigured);

}

}

}

return success;

}

The mRemoteDevice object above is of type ICameraDeviceUserWrapper and is obtained when the camera is opened. The code is as follows:

\frameworks\base\core\java\android\hardware\camera2\CameraManager.java

private CameraDevice openCameraDeviceUserAsync(String cameraId,

CameraDevice.StateCallback callback, Executor executor, final int uid)

throws CameraAccessException {

CameraCharacteristics characteristics = getCameraCharacteristics(cameraId);

CameraDevice device = null;

synchronized (mLock) {

ICameraDeviceUser cameraUser = null;

// Create the CameraDeviceImpl object

android.hardware.camera2.impl.CameraDeviceImpl deviceImpl =

new android.hardware.camera2.impl.CameraDeviceImpl(

cameraId,

callback,

executor,

characteristics,

mContext.getApplicationInfo().targetSdkVersion);

ICameraDeviceCallbacks callbacks = deviceImpl.getCallbacks();

try {

if (supportsCamera2ApiLocked(cameraId)) {

// Use cameraservice's cameradeviceclient implementation for HAL3.2+ devices

// Get the cameraService proxy object

ICameraService cameraService = CameraManagerGlobal.get().getCameraService();

if (cameraService == null) {

throw new ServiceSpecificException(

ICameraService.ERROR_DISCONNECTED,

"Camera service is currently unavailable");

}

// Open the camera through the cameraService proxy and obtain the ICameraDeviceUser object cameraUser

cameraUser = cameraService.connectDevice(callbacks, cameraId,

mContext.getOpPackageName(), uid);

} else {

// Use legacy camera implementation for HAL1 devices

int id;

try {

id = Integer.parseInt(cameraId);

} catch (NumberFormatException e) {

throw new IllegalArgumentException("Expected cameraId to be numeric, but it was: "

+ cameraId);

}

Log.i(TAG, "Using legacy camera HAL.");

cameraUser = CameraDeviceUserShim.connectBinderShim(callbacks, id);

}

} catch (ServiceSpecificException e) {

if (e.errorCode == ICameraService.ERROR_DEPRECATED_HAL) {

throw new AssertionError("Should've gone down the shim path");

} else if (e.errorCode == ICameraService.ERROR_CAMERA_IN_USE ||

e.errorCode == ICameraService.ERROR_MAX_CAMERAS_IN_USE ||

e.errorCode == ICameraService.ERROR_DISABLED ||

e.errorCode == ICameraService.ERROR_DISCONNECTED ||

e.errorCode == ICameraService.ERROR_INVALID_OPERATION) {

// Received one of the known connection errors

// The remote camera device cannot be connected to, so

// set the local camera to the startup error state

deviceImpl.setRemoteFailure(e);

if (e.errorCode == ICameraService.ERROR_DISABLED ||

e.errorCode == ICameraService.ERROR_DISCONNECTED ||

e.errorCode == ICameraService.ERROR_CAMERA_IN_USE) {

// Per API docs, these failures call onError and throw

throwAsPublicException(e);

}

} else {

// Unexpected failure - rethrow

throwAsPublicException(e);

}

} catch (RemoteException e) {

// Camera service died - act as if it's a CAMERA_DISCONNECTED case

ServiceSpecificException sse = new ServiceSpecificException(

ICameraService.ERROR_DISCONNECTED,

"Camera service is currently unavailable");

deviceImpl.setRemoteFailure(sse);

throwAsPublicException(sse);

}

// TODO: factor out callback to be non-nested, then move setter to constructor

// For now, calling setRemoteDevice will fire initial

// onOpened/onUnconfigured callbacks.

// This function call may post onDisconnected and throw CAMERA_DISCONNECTED if

// cameraUser dies during setup.

// Pass the cameraUser object obtained by opening the camera to the CameraDeviceImpl object deviceImpl

deviceImpl.setRemoteDevice(cameraUser);

device = deviceImpl;

}

// Return the CameraDeviceImpl object deviceImpl

return device;

}

The preceding analysis of configureStreamsChecked shows that it consists of three main stages:

- mRemoteDevice.beginConfigure(); // begin configuration

- mRemoteDevice.deleteStream(streamId) and mRemoteDevice.createStream(outConfig); // delete and create streams

- mRemoteDevice.endConfigure(operatingMode, sessionParams); // finish configuration. The first two stages are preparation; this stage performs the actual configuration.
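A minimal sketch of this three-stage sequence follows. FakeRemoteDevice is a hypothetical stand-in that only records the calls it receives; it is not the framework's ICameraDeviceUserWrapper, and session parameters are left out for brevity. packOperatingMode mirrors the expression operatingMode | (customOpMode << 16) from configureStreamsChecked; the mode values passed in main are illustrative only.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class ConfigureSketch {

    /** Hypothetical stand-in for the remote device; it only records calls. */
    public static class FakeRemoteDevice {
        public final List<String> calls = new ArrayList<>();
        public int lastOperatingMode;
        private int nextStreamId = 0;

        public void beginConfigure() { calls.add("beginConfigure"); }

        public void deleteStream(int streamId) { calls.add("deleteStream(" + streamId + ")"); }

        public int createStream(String output) {
            calls.add("createStream(" + output + ")");
            return nextStreamId++;
        }

        public void endConfigure(int operatingMode) {
            lastOperatingMode = operatingMode;
            calls.add("endConfigure");
        }
    }

    /** Places the custom mode in the upper 16 bits, as configureStreamsChecked does. */
    public static int packOperatingMode(int operatingMode, int customOpMode) {
        return operatingMode | (customOpMode << 16);
    }

    /** Runs the three stages in order: begin, delete/create streams, end. */
    public static void configure(FakeRemoteDevice device, List<Integer> toDelete,
            List<String> toCreate, int operatingMode, int customOpMode) {
        device.beginConfigure();                       // stage 1
        for (int streamId : toDelete) {                // stage 2: free old streams first
            device.deleteStream(streamId);
        }
        for (String output : toCreate) {               // stage 2: then add new streams
            device.createStream(output);
        }
        device.endConfigure(packOperatingMode(operatingMode, customOpMode)); // stage 3
    }

    public static void main(String[] args) {
        FakeRemoteDevice device = new FakeRemoteDevice();
        configure(device, Arrays.asList(3), Arrays.asList("preview", "video"), 1, 0);
        System.out.println(device.calls);
        System.out.println(Integer.toHexString(packOperatingMode(0, 0x8))); // prints 80000
    }
}
```

With customOpMode equal to 0 the packed value is the unchanged operating mode, which is consistent with the note that the constrained high-speed session does not modify it.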

The analysis below focuses on endConfigure: \frameworks\base\core\java\android\hardware\camera2\impl\ICameraDeviceUserWrapper.java

public void endConfigure(int operatingMode, CameraMetadataNative sessionParams)

throws CameraAccessException {

try {

// Binder IPC call; the actual implementation is in CameraDeviceClient.cpp

mRemoteDevice.endConfigure(operatingMode, (sessionParams == null) ?

new CameraMetadataNative() : sessionParams);

} catch (Throwable t) {

CameraManager.throwAsPublicException(t);

throw new UnsupportedOperationException("Unexpected exception", t);

}

}

File: \frameworks\av\services\camera\libcameraservice\api2\CameraDeviceClient.cpp

binder::Status CameraDeviceClient::endConfigure(int operatingMode,

const hardware::camera2::impl::CameraMetadataNative& sessionParams) {

ATRACE_CALL();

ALOGV("%s: ending configure (%d input stream, %zu output surfaces)",

__FUNCTION__, mInputStream.configured ? 1 : 0,

mStreamMap.size());

......

// Sanitize the high speed session against necessary capability bit.

bool isConstrainedHighSpeed = (operatingMode == ICameraDeviceUser::CONSTRAINED_HIGH_SPEED_MODE);

// Check whether CONSTRAINED_HIGH_SPEED_MODE is supported

if (isConstrainedHighSpeed) {

CameraMetadata staticInfo = mDevice->info();

camera_metadata_entry_t entry = staticInfo.find(ANDROID_REQUEST_AVAILABLE_CAPABILITIES);

bool isConstrainedHighSpeedSupported = false;

for(size_t i = 0; i < entry.count; ++i) {

uint8_t capability = entry.data.u8[i];

if (capability == ANDROID_REQUEST_AVAILABLE_CAPABILITIES_CONSTRAINED_HIGH_SPEED_VIDEO) {

isConstrainedHighSpeedSupported = true;

break;

}

}

if (!isConstrainedHighSpeedSupported) {

String8 msg = String8::format(

"Camera %s: Try to create a constrained high speed configuration on a device"

" that doesn't support it.", mCameraIdStr.string());

ALOGE("%s: %s", __FUNCTION__, msg.string());

return STATUS_ERROR(CameraService::ERROR_ILLEGAL_ARGUMENT,

msg.string());

}

}

// Once the check passes, configure the streams

status_t err = mDevice->configureStreams(sessionParams, operatingMode);

if (err == BAD_VALUE) {

String8 msg = String8::format("Camera %s: Unsupported set of inputs/outputs provided",

mCameraIdStr.string());

ALOGE("%s: %s", __FUNCTION__, msg.string());

res = STATUS_ERROR(CameraService::ERROR_ILLEGAL_ARGUMENT, msg.string());

} else if (err != OK) {

String8 msg = String8::format("Camera %s: Error configuring streams: %s (%d)",

mCameraIdStr.string(), strerror(-err), err);

ALOGE("%s: %s", __FUNCTION__, msg.string());

res = STATUS_ERROR(CameraService::ERROR_INVALID_OPERATION, msg.string());

}

return res;

}

Execution then enters: \frameworks\av\services\camera\libcameraservice\device3\Camera3Device.cpp

status_t Camera3Device::configureStreams(const CameraMetadata& sessionParams, int operatingMode) {

ATRACE_CALL();

ALOGV("%s: E", __FUNCTION__);

Mutex::Autolock il(mInterfaceLock);

Mutex::Autolock l(mLock);

// In case the client doesn't include any session parameter, try a

// speculative configuration using the values from the last cached

// default request.

if (sessionParams.isEmpty() &&

((mLastTemplateId > 0) && (mLastTemplateId < CAMERA3_TEMPLATE_COUNT)) &&

(!mRequestTemplateCache[mLastTemplateId].isEmpty())) {

ALOGV("%s: Speculative session param configuration with template id: %d", __func__,

mLastTemplateId);

return filterParamsAndConfigureLocked(mRequestTemplateCache[mLastTemplateId],

operatingMode);

}

return filterParamsAndConfigureLocked(sessionParams, operatingMode);

}

filterParamsAndConfigureLocked in turn calls the following function:

status_t Camera3Device::configureStreamsLocked(int operatingMode,

const CameraMetadata& sessionParams, bool notifyRequestThread) {

ATRACE_CALL();

status_t res;

if (mStatus != STATUS_UNCONFIGURED && mStatus != STATUS_CONFIGURED) {

CLOGE("Not idle");

return INVALID_OPERATION;

}

if (operatingMode < 0) {

CLOGE("Invalid operating mode: %d", operatingMode);

return BAD_VALUE;

}

// Check whether constrained high-speed mode is requested

bool isConstrainedHighSpeed =

static_cast<int>(StreamConfigurationMode::CONSTRAINED_HIGH_SPEED_MODE) ==

operatingMode;

if (mOperatingMode != operatingMode) {

mNeedConfig = true;

mIsConstrainedHighSpeedConfiguration = isConstrainedHighSpeed;

mOperatingMode = operatingMode;

}

if (!mNeedConfig) {

ALOGV("%s: Skipping config, no stream changes", __FUNCTION__);

return OK;

}

// Workaround for device HALv3.2 or older spec bug - zero streams requires

// adding a dummy stream instead.

// TODO: Bug: 17321404 for fixing the HAL spec and removing this workaround.

if (mOutputStreams.size() == 0) {

addDummyStreamLocked();

} else {

tryRemoveDummyStreamLocked();

}

// Start configuring the streams

ALOGV("%s: Camera %s: Starting stream configuration", __FUNCTION__, mId.string());

mPreparerThread->pause();

camera3_stream_configuration config;

config.operation_mode = mOperatingMode; // Assign mOperatingMode to config.operation_mode

config.num_streams = (mInputStream != NULL) + mOutputStreams.size();

Vector<camera3_stream_t*> streams;

streams.setCapacity(config.num_streams);

std::vector<uint32_t> bufferSizes(config.num_streams, 0);

if (mInputStream != NULL) {

camera3_stream_t *inputStream;

inputStream = mInputStream->startConfiguration();

if (inputStream == NULL) {

CLOGE("Can't start input stream configuration");

cancelStreamsConfigurationLocked();

return INVALID_OPERATION;

}

streams.add(inputStream);

}

// Configure the output streams

for (size_t i = 0; i < mOutputStreams.size(); i++) {

// Don't configure bidi streams twice, nor add them twice to the list

if (mOutputStreams[i].get() ==

static_cast<Camera3StreamInterface*>(mInputStream.get())) {

config.num_streams--;

continue;

}

camera3_stream_t *outputStream;

outputStream = mOutputStreams.editValueAt(i)->startConfiguration();

if (outputStream == NULL) {

CLOGE("Can't start output stream configuration");

cancelStreamsConfigurationLocked();

return INVALID_OPERATION;

}

streams.add(outputStream);

if (outputStream->format == HAL_PIXEL_FORMAT_BLOB &&

outputStream->data_space == HAL_DATASPACE_V0_JFIF) {

size_t k = i + ((mInputStream != nullptr) ? 1 : 0); // Input stream if present should

// always occupy the initial entry.

bufferSizes[k] = static_cast<uint32_t>(

getJpegBufferSize(outputStream->width, outputStream->height));

}

}

config.streams = streams.editArray();

// Do the HAL configuration; will potentially touch stream

// max_buffers, usage, priv fields.

const camera_metadata_t *sessionBuffer = sessionParams.getAndLock();

// Ask the HAL layer to configure the streams

res = mInterface->configureStreams(sessionBuffer, &config, bufferSizes);

sessionParams.unlock(sessionBuffer);

......

return OK;

}

Finally, the HIDL interface is called: \frameworks\av\services\camera\libcameraservice\device3\Camera3Device.cpp

status_t Camera3Device::HalInterface::configureStreams(const camera_metadata_t *sessionParams,

camera3_stream_configuration *config, const std::vector<uint32_t>& bufferSizes) {

ATRACE_NAME("CameraHal::configureStreams");

if (!valid()) return INVALID_OPERATION;

status_t res = OK;

......

// See if we have v3.4 or v3.3 HAL

if (mHidlSession_3_4 != nullptr) {

// We do; use v3.4 for the call

ALOGV("%s: v3.4 device found", __FUNCTION__);

device::V3_4::HalStreamConfiguration finalConfiguration3_4;

auto err = mHidlSession_3_4->configureStreams_3_4(requestedConfiguration3_4,

[&status, &finalConfiguration3_4]

(common::V1_0::Status s, const device::V3_4::HalStreamConfiguration& halConfiguration) {

finalConfiguration3_4 = halConfiguration;

status = s;

});

if (!err.isOk()) {

ALOGE("%s: Transaction error: %s", __FUNCTION__, err.description().c_str());

return DEAD_OBJECT;

}

finalConfiguration.streams.resize(finalConfiguration3_4.streams.size());

for (size_t i = 0; i < finalConfiguration3_4.streams.size(); i++) {

finalConfiguration.streams[i] = finalConfiguration3_4.streams[i].v3_3;

}

} else if (mHidlSession_3_3 != nullptr) {

// We do; use v3.3 for the call

ALOGV("%s: v3.3 device found", __FUNCTION__);

auto err = mHidlSession_3_3->configureStreams_3_3(requestedConfiguration3_2,

[&status, &finalConfiguration]

(common::V1_0::Status s, const device::V3_3::HalStreamConfiguration& halConfiguration) {

finalConfiguration = halConfiguration;

status = s;

});

if (!err.isOk()) {

ALOGE("%s: Transaction error: %s", __FUNCTION__, err.description().c_str());

return DEAD_OBJECT;

}

} else {

// We don't; use v3.2 call and construct a v3.3 HalStreamConfiguration

ALOGV("%s: v3.2 device found", __FUNCTION__);

HalStreamConfiguration finalConfiguration_3_2;

auto err = mHidlSession->configureStreams(requestedConfiguration3_2,

[&status, &finalConfiguration_3_2]

(common::V1_0::Status s, const HalStreamConfiguration& halConfiguration) {

finalConfiguration_3_2 = halConfiguration;

status = s;

});

if (!err.isOk()) {

ALOGE("%s: Transaction error: %s", __FUNCTION__, err.description().c_str());

return DEAD_OBJECT;

}

finalConfiguration.streams.resize(finalConfiguration_3_2.streams.size());

for (size_t i = 0; i < finalConfiguration_3_2.streams.size(); i++) {

finalConfiguration.streams[i].v3_2 = finalConfiguration_3_2.streams[i];

finalConfiguration.streams[i].overrideDataSpace =

requestedConfiguration3_2.streams[i].dataSpace;

}

}

......

return res;

}

Each version of the HIDL interface is located under: \hardware\interfaces\camera\device\
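The version selection in HalInterface::configureStreams above amounts to trying the newest available session first and falling back to older ones. The sketch below restates that order in Java; the two boolean flags are hypothetical stand-ins for the null checks on mHidlSession_3_4 and mHidlSession_3_3, and the returned strings are simply the names of the HIDL calls that appear in the listing.

```java
public class HalVersionFallback {

    /**
     * Returns the name of the configure call that would be used,
     * preferring v3.4, then v3.3, and finally the v3.2 baseline.
     */
    public static String chooseConfigureCall(boolean hasSession34, boolean hasSession33) {
        if (hasSession34) {
            return "configureStreams_3_4";
        }
        if (hasSession33) {
            return "configureStreams_3_3";
        }
        // No newer session handle: fall back to the v3.2 call.
        return "configureStreams";
    }

    public static void main(String[] args) {
        System.out.println(chooseConfigureCall(true, true));   // prints configureStreams_3_4
        System.out.println(chooseConfigureCall(false, true));  // prints configureStreams_3_3
        System.out.println(chooseConfigureCall(false, false)); // prints configureStreams
    }
}
```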

Part of the HAL-layer code is shown below: \vendor\qcom\proprietary\camx\src\core\hal\camxhal3.cpp

static int configure_streams(

const struct camera3_device* pCamera3DeviceAPI,

camera3_stream_configuration_t* pStreamConfigsAPI)

{

CAMX_ENTRYEXIT_SCOPE(CamxLogGroupHAL, SCOPEEventHAL3ConfigureStreams);

......

Camera3StreamConfig* pStreamConfigs = reinterpret_cast<Camera3StreamConfig*>(pStreamConfigsAPI);

result = pHALDevice->ConfigureStreams(pStreamConfigs);

if ((CamxResultSuccess != result) && (CamxResultEInvalidArg != result))

{

// HAL interface requires -ENODEV (EFailed) if a fatal error occurs

result = CamxResultEFailed;

}

if (CamxResultSuccess == result)

{

for (UINT32 stream = 0; stream < pStreamConfigsAPI->num_streams; stream++)

{

CAMX_ASSERT(NULL != pStreamConfigsAPI->streams[stream]);

if (NULL == pStreamConfigsAPI->streams[stream])

{

CAMX_LOG_ERROR(CamxLogGroupHAL, "Invalid argument 2 for configure_streams()");

// HAL interface requires -EINVAL (EInvalidArg) for invalid arguments

result = CamxResultEInvalidArg;

break;

}

else

{

CAMX_LOG_CONFIG(CamxLogGroupHAL, " FINAL stream[%d] = %p - info:", stream,

pStreamConfigsAPI->streams[stream]);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " format : %d, %s",

pStreamConfigsAPI->streams[stream]->format,

FormatToString(pStreamConfigsAPI->streams[stream]->format));

CAMX_LOG_CONFIG(CamxLogGroupHAL, " width : %d",

pStreamConfigsAPI->streams[stream]->width);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " height : %d",

pStreamConfigsAPI->streams[stream]->height);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " stream_type : %08x, %s",

pStreamConfigsAPI->streams[stream]->stream_type,

StreamTypeToString(pStreamConfigsAPI->streams[stream]->stream_type));

CAMX_LOG_CONFIG(CamxLogGroupHAL, " usage : %08x",

pStreamConfigsAPI->streams[stream]->usage);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " max_buffers : %d",

pStreamConfigsAPI->streams[stream]->max_buffers);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " rotation : %08x, %s",

pStreamConfigsAPI->streams[stream]->rotation,

RotationToString(pStreamConfigsAPI->streams[stream]->rotation));

CAMX_LOG_CONFIG(CamxLogGroupHAL, " data_space : %08x, %s",

pStreamConfigsAPI->streams[stream]->data_space,

DataSpaceToString(pStreamConfigsAPI->streams[stream]->data_space));

CAMX_LOG_CONFIG(CamxLogGroupHAL, " priv : %p",

pStreamConfigsAPI->streams[stream]->priv);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " reserved[0] : %p",

pStreamConfigsAPI->streams[stream]->reserved[0]);

CAMX_LOG_CONFIG(CamxLogGroupHAL, " reserved[1] : %p",

pStreamConfigsAPI->streams[stream]->reserved[1]);

Camera3HalStream* pHalStream =

reinterpret_cast<Camera3HalStream*>(pStreamConfigsAPI->streams[stream]->reserved[0]);

if (pHalStream != NULL)

{

if (TRUE == HwEnvironment::GetInstance()->GetStaticSettings()->enableHALFormatOverride) // GetInstance() initializes the capabilities of the current device and sensor

{

pStreamConfigsAPI->streams[stream]->format =

static_cast<HALPixelFormat>(pHalStream->overrideFormat);

}

CAMX_LOG_CONFIG(CamxLogGroupHAL,

" pHalStream: %p format : 0x%x, overrideFormat : 0x%x consumer usage: %llx, producer usage: %llx",

pHalStream, pStreamConfigsAPI->streams[stream]->format,

pHalStream->overrideFormat, pHalStream->consumerUsage, pHalStream->producerUsage);

}

}

}

}

......

return Utils::CamxResultToErrno(result);

}

ConfigureStreams is implemented in \vendor\qcom\proprietary\camx\src\core\hal\camxhaldevice.cpp

CamxResult HALDevice::ConfigureStreams(

Camera3StreamConfig* pStreamConfigs)

{

CamxResult result = CamxResultSuccess;

// Validate the incoming stream configurations

result = CheckValidStreamConfig(pStreamConfigs);

......

if (CamxResultSuccess == result)

{

ClearFrameworkRequestBuffer();

m_numPipelines = 0;

if (TRUE == m_bCHIModuleInitialized)

{

GetCHIAppCallbacks()->chi_teardown_override_session(reinterpret_cast<camera3_device*>(&m_camera3Device), 0, NULL);

}

m_bCHIModuleInitialized = CHIModuleInitialize(pStreamConfigs); // Initialize the CHI module

......

}

return result;

}

CHIModuleInitialize invokes the callback registered by the CHI layer:

////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

// HALDevice::CHIModuleInitialize

////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

BOOL HALDevice::CHIModuleInitialize(

Camera3StreamConfig* pStreamConfigs)

{

BOOL isOverrideEnabled = FALSE;

if (TRUE == HAL3Module::GetInstance()->IsCHIOverrideModulePresent())

{

/// @todo (CAMX-1518) Handle private data from Override module

VOID* pPrivateData;

chi_hal_callback_ops_t* pCHIAppCallbacks = GetCHIAppCallbacks();

// Invoke the CHI-layer callback

pCHIAppCallbacks->chi_initialize_override_session(GetCameraId(),

reinterpret_cast<const camera3_device_t*>(&m_camera3Device),

&m_HALCallbacks,

reinterpret_cast<camera3_stream_configuration_t*>(pStreamConfigs),

&isOverrideEnabled,

&pPrivateData);

}

return isOverrideEnabled;

}

The CHI-layer callback is implemented in \vendor\qcom\proprietary\chi-cdk\vendor\chioverride\default\chxextensioninterface.cpp

CDKResult ExtensionModule::InitializeOverrideSession(

uint32_t logicalCameraId,

const camera3_device_t* pCamera3Device,

const chi_hal_ops_t* chiHalOps,

camera3_stream_configuration_t* pStreamConfig,

int* pIsOverrideEnabled,

VOID** pPrivate)

{

CDKResult result = CDKResultSuccess;

UINT32 modeCount = 0;

ChiSensorModeInfo* pAllModes = NULL;

UINT32 fps = *m_pDefaultMaxFPS;

BOOL isVideoMode = FALSE;

uint32_t operation_mode;

static BOOL fovcModeCheck = EnableFOVCUseCase();

UsecaseId selectedUsecaseId = UsecaseId::NoMatch;

UINT minSessionFps = 0;

UINT maxSessionFps = 0;

......

if ((isVideoMode == TRUE) && (operation_mode != 0))

{

UINT32 numSensorModes = m_logicalCameraInfo[logicalCameraId].m_cameraCaps.numSensorModes;

// Get the HFR-related sensor mode information: frame rate, batched frames, and so on

CHISENSORMODEINFO* pAllSensorModes = m_logicalCameraInfo[logicalCameraId].pSensorModeInfo;

if ((operation_mode - 1) >= numSensorModes)

{

result = CDKResultEOverflow;

CHX_LOG_ERROR("operation_mode: %d, numSensorModes: %d", operation_mode, numSensorModes);

}

else

{

fps = pAllSensorModes[operation_mode - 1].frameRate;

}

}

if (CDKResultSuccess == result)

{

#if defined(CAMX_ANDROID_API) && (CAMX_ANDROID_API >= 28) //Android-P or better

camera_metadata_t *metadata = const_cast<camera_metadata_t*>(pStreamConfig->session_parameters);

camera_metadata_entry_t entry = { 0 };

entry.tag = ANDROID_CONTROL_AE_TARGET_FPS_RANGE;

// The client may choose to send NULL session parameter, which is fine. For example, torch mode

// will have NULL session param.

if (metadata != NULL)

{

// Look up the entry for the given tag; on success the data is stored in the entry struct passed in.

int ret = find_camera_metadata_entry(metadata, entry.tag, &entry);

if(ret == 0) {

minSessionFps = entry.data.i32[0];

maxSessionFps = entry.data.i32[1];

m_usecaseMaxFPS = maxSessionFps;

}

}

#endif

if ((StreamConfigModeConstrainedHighSpeed == pStreamConfig->operation_mode) ||

(StreamConfigModeSuperSlowMotionFRC == pStreamConfig->operation_mode))

{

// For an HFR mode, do the following:

// 1) Search HFRVideoSizes for the entries that match the video/preview stream size.

// Note: the preview and recording streams must have the same size, otherwise high-speed camera session creation fails.

// 2) If a single entry is found in SupportedHFRVideoSizes, take the batch size from that entry.

SearchNumBatchedFrames(logicalCameraId, pStreamConfig,

&m_usecaseNumBatchedFrames, &m_usecaseMaxFPS, maxSessionFps);

if (480 > m_usecaseMaxFPS)

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE_HFR;

}

else

{

// For 480FPS or higher, require more aggressive power hint

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE_HFR_480FPS;

}

}

else

{

// Not a HFR usecase, batch frames value need to be set to 1.

m_usecaseNumBatchedFrames = 1;

if (maxSessionFps == 0)

{

m_usecaseMaxFPS = fps;

}

if (TRUE == isVideoMode)

{

if (30 >= m_usecaseMaxFPS)

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE;

}

else

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_VIDEO_ENCODE_60FPS;

}

}

else

{

m_CurrentpowerHint = PERF_LOCK_POWER_HINT_PREVIEW;

}

}

if ((NULL != m_pPerfLockManager[logicalCameraId]) && (m_CurrentpowerHint != m_previousPowerHint))

{

m_pPerfLockManager[logicalCameraId]->ReleasePerfLock(m_previousPowerHint);

}

// Example [B == batch]: (240 FPS / 4 FPB = 60 BPS) / 30 FPS (Stats frequency goal) = 2 BPF i.e. skip every other stats

*m_pStatsSkipPattern = m_usecaseMaxFPS / m_usecaseNumBatchedFrames / 30;

if (*m_pStatsSkipPattern < 1)

{

*m_pStatsSkipPattern = 1;

}

m_VideoHDRMode = (StreamConfigModeVideoHdr == pStreamConfig->operation_mode);

m_torchWidgetUsecase = (StreamConfigModeQTITorchWidget == pStreamConfig->operation_mode);

// this check is introduced to avoid set *m_pEnableFOVC == 1 if fovcEnable is disabled in

// overridesettings & fovc bit is set in operation mode.

// as well as to avoid set,when we switch Usecases.

if (TRUE == fovcModeCheck)

{

*m_pEnableFOVC = ((pStreamConfig->operation_mode & StreamConfigModeQTIFOVC) == StreamConfigModeQTIFOVC) ? 1 : 0;

}

SetHALOps(chiHalOps, logicalCameraId);

m_logicalCameraInfo[logicalCameraId].m_pCamera3Device = pCamera3Device;

// Find the matching use case based on CameraInfo.

selectedUsecaseId = m_pUsecaseSelector->GetMatchingUsecase(&m_logicalCameraInfo[logicalCameraId],

pStreamConfig);

CHX_LOG_CONFIG("Session_parameters FPS range %d:%d, BatchSize: %u FPS: %u SkipPattern: %u, "

"cameraId = %d selected use case = %d",

minSessionFps,

maxSessionFps,

m_usecaseNumBatchedFrames,

m_usecaseMaxFPS,

*m_pStatsSkipPattern,

logicalCameraId,

selectedUsecaseId);

// FastShutter mode supported only in ZSL usecase.

if ((pStreamConfig->operation_mode == StreamConfigModeFastShutter) &&

(UsecaseId::PreviewZSL != selectedUsecaseId))

{

pStreamConfig->operation_mode = StreamConfigModeNormal;

}

m_operationMode[logicalCameraId] = pStreamConfig->operation_mode;

}

if (UsecaseId::NoMatch != selectedUsecaseId)

{

// Create the Usecase object for the selected UsecaseId. HFR uses the default UsecaseId.

m_pSelectedUsecase[logicalCameraId] =

m_pUsecaseFactory->CreateUsecaseObject(&m_logicalCameraInfo[logicalCameraId],

selectedUsecaseId, pStreamConfig);

}

.....

return result;

}

AdvancedCameraUsecase::Create is then called. It is implemented in vendor\qcom\proprietary\chi-cdk\vendor\chioverride\default\chxadvancedcamerausecase.cpp:

AdvancedCameraUsecase* AdvancedCameraUsecase::Create(

LogicalCameraInfo* pCameraInfo, ///< Camera info

camera3_stream_configuration_t* pStreamConfig, ///< Stream configuration

UsecaseId usecaseId) ///< Identifier for usecase function

{

CDKResult result = CDKResultSuccess;

AdvancedCameraUsecase* pAdvancedCameraUsecase = CHX_NEW AdvancedCameraUsecase;

if ((NULL != pAdvancedCameraUsecase) && (NULL != pStreamConfig))

{

// Initialize goes on to call CameraUsecaseBase::Initialize(m_pCallbacks), which in turn calls CameraUsecaseBase::CreatePipeline

result = pAdvancedCameraUsecase->Initialize(pCameraInfo, pStreamConfig, usecaseId);

if (CDKResultSuccess != result)

{

pAdvancedCameraUsecase->Destroy(FALSE);

pAdvancedCameraUsecase = NULL;

}

}

else

{

result = CDKResultEFailed;

}

return pAdvancedCameraUsecase;

}

At this point the CHI layer has selected the appropriate UsecaseId and created the required pipeline, based on the parameters passed by the application, the platform and sensor information, and the topology XML.
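The FPS-dependent decisions made in InitializeOverrideSession (the power hint and the stats skip pattern) can be summarized in a small standalone sketch. The enum and function names here are hypothetical stand-ins for the PERF_LOCK_POWER_HINT_* values and member variables in the listing; the zero-batch guard is an addition for this sketch and is not in the original code.

```cpp
#include <cstdint>

// Hypothetical stand-ins for the PERF_LOCK_POWER_HINT_* values in the listing.
enum class PowerHint {
    Preview,
    VideoEncode,
    VideoEncode60Fps,
    VideoEncodeHfr,
    VideoEncodeHfr480Fps
};

// Mirrors the branch structure of the listing: HFR sessions pick an HFR hint
// (split at 480 fps), other video sessions split at 30 fps, and everything
// else gets the preview hint.
PowerHint selectPowerHint(bool isHfrSession, bool isVideoMode, uint32_t maxFps) {
    if (isHfrSession) {
        return (maxFps < 480) ? PowerHint::VideoEncodeHfr
                              : PowerHint::VideoEncodeHfr480Fps;
    }
    if (!isVideoMode) {
        return PowerHint::Preview;
    }
    return (maxFps <= 30) ? PowerHint::VideoEncode : PowerHint::VideoEncode60Fps;
}

// Stats are processed at roughly 30 Hz. With 240 fps and 4 frames per batch,
// 60 batches arrive per second, so stats are taken from every second batch.
uint32_t statsSkipPattern(uint32_t maxFps, uint32_t numBatchedFrames) {
    if (numBatchedFrames == 0) {
        numBatchedFrames = 1;  // guard added for this sketch only
    }
    const uint32_t pattern = maxFps / numBatchedFrames / 30;
    return (pattern < 1) ? 1 : pattern;
}
```

For the example in the source comment, statsSkipPattern(240, 4) evaluates to 2, and any non-HFR session with a batch size of 1 at 30 fps evaluates to 1.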

Restrictions on stream configuration for HFR:

- High-speed streams are configured through createConstrainedHighSpeedCaptureSession.

- Only one or two streams can be configured: one preview stream and one video (recording) stream.

- The usage flags of the preview stream are restricted to GRALLOC_USAGE_HW_TEXTURE |

- The usage flags of the video stream are restricted to GRALLOC_USAGE_HW_VIDEO_ENCODER.
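As a rough illustration of these restrictions, the check below validates a proposed stream set before a constrained high-speed session is requested. StreamRole, StreamDesc and isValidHfrStreamSet are hypothetical names invented for this sketch; the real HAL derives the stream role from the gralloc usage flags rather than from an explicit enum. The equal-size rule comes from the note in InitializeOverrideSession.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stream roles, used only in this sketch.
enum class StreamRole { Preview, Video, Other };

struct StreamDesc {
    StreamRole role;
    int width;
    int height;
};

// Returns true when the stream set satisfies the HFR restrictions:
// one or two streams, at most one preview and one video stream,
// and all streams sharing the same size.
bool isValidHfrStreamSet(const std::vector<StreamDesc>& streams) {
    if (streams.empty() || streams.size() > 2) {
        return false;
    }
    std::size_t previewCount = 0;
    std::size_t videoCount = 0;
    for (const StreamDesc& s : streams) {
        if (s.role == StreamRole::Preview) {
            ++previewCount;
        } else if (s.role == StreamRole::Video) {
            ++videoCount;
        } else {
            return false;  // only preview and video streams are allowed
        }
        // Preview and recording streams must have the same size.
        if (s.width != streams.front().width || s.height != streams.front().height) {
            return false;
        }
    }
    return previewCount <= 1 && videoCount <= 1;
}
```

A 1920x1080 preview stream paired with a 1920x1080 video stream passes this check, while pairing it with a 1280x720 video stream does not.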

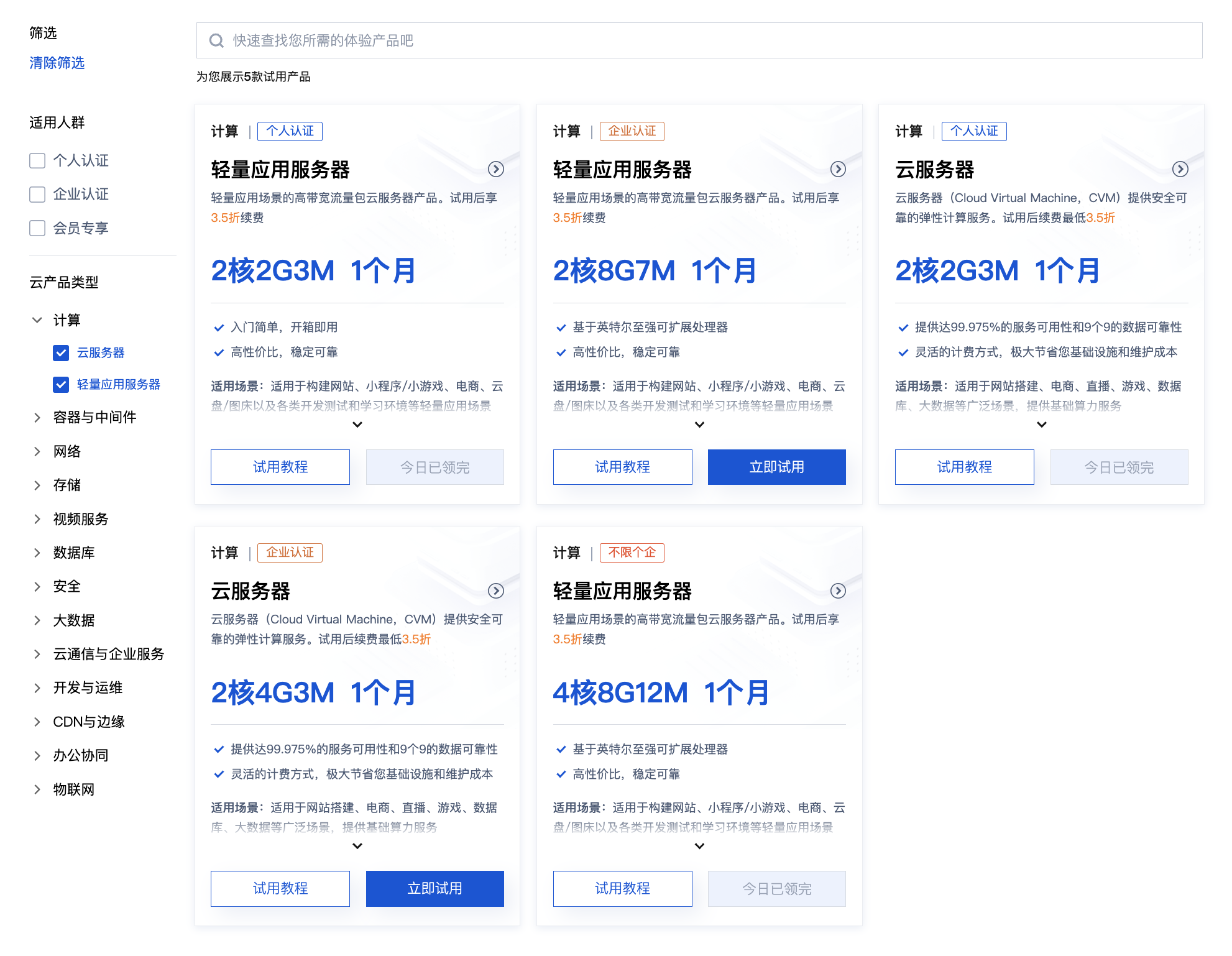

3. How does the Qualcomm platform obtain the platform and camera sensor capabilities?

The video quality and the matching high frame rate can be selected in the Snapdragon Camera settings screen. Which entries appear in those lists is determined by the output capabilities of the platform and the camera sensor, which are read when the camera service starts.

After a video quality is selected, the list of HFR options is refreshed by querying the FPS values supported at the current resolution. The process is as follows:
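Reduced to its core, that query filters the advertised high-speed configurations by video size and keeps only fixed-rate ranges. The sketch below shows the idea under simplifying assumptions: HighSpeedConfig and hfrOptionsFor are hypothetical names, and the encoder capability check that the app also performs is left out.

```cpp
#include <string>
#include <vector>

// Hypothetical, simplified view of one advertised high-speed configuration.
struct HighSpeedConfig {
    int width;
    int height;
    int fpsMin;
    int fpsMax;
};

// Builds the option list for one video size. Only ranges whose lower and
// upper bound are equal qualify, because HFR must run preview and recording
// at the same fixed rate. "off" is always offered.
std::vector<std::string> hfrOptionsFor(const std::vector<HighSpeedConfig>& configs,
                                       int width, int height) {
    std::vector<std::string> options{"off"};
    for (const HighSpeedConfig& c : configs) {
        if (c.width != width || c.height != height) {
            continue;  // configuration belongs to another video size
        }
        if (c.fpsMin != c.fpsMax) {
            continue;  // variable-rate range, not usable for HFR/HSR
        }
        options.push_back("hfr" + std::to_string(c.fpsMax));
        options.push_back("hsr" + std::to_string(c.fpsMax));
    }
    return options;
}
```

With a 1920x1080 configuration at a fixed 120 fps, the list for 1080p becomes off, hfr120, hsr120; a size with no matching configuration only offers off.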

1. APP

(1) \packages\apps\SnapdragonCamera\src\com\android\camera\SettingsManager.java

// Query the supported fps values and update the option list

private void filterHFROptions() {

ListPreference hfrPref = mPreferenceGroup.findPreference(KEY_VIDEO_HIGH_FRAME_RATE);

if (hfrPref != null) {

hfrPref.reloadInitialEntriesAndEntryValues();

if (filterUnsupportedOptions(hfrPref,

getSupportedHighFrameRate())) {

mFilteredKeys.add(hfrPref.getKey());

}

}

}

private List<String> getSupportedHighFrameRate() {

ArrayList<String> supported = new ArrayList<String>();

supported.add("off");

ListPreference videoQuality = mPreferenceGroup.findPreference(KEY_VIDEO_QUALITY);

ListPreference videoEncoder = mPreferenceGroup.findPreference(KEY_VIDEO_ENCODER);

if (videoQuality == null || videoEncoder == null) return supported;

String videoSizeStr = videoQuality.getValue();

int videoEncoderNum = SettingTranslation.getVideoEncoder(videoEncoder.getValue());

VideoCapabilities videoCapabilities = null;

boolean findVideoEncoder = false;

if (videoSizeStr != null) {

Size videoSize = parseSize(videoSizeStr);

MediaCodecList allCodecs = new MediaCodecList(MediaCodecList.ALL_CODECS);

for (MediaCodecInfo info : allCodecs.getCodecInfos()) {

if (!info.isEncoder() || info.getName().contains("google")) continue;

for (String type : info.getSupportedTypes()) {

if ((videoEncoderNum == MediaRecorder.VideoEncoder.MPEG_4_SP && type.equalsIgnoreCase(MediaFormat.MIMETYPE_VIDEO_MPEG4))

|| (videoEncoderNum == MediaRecorder.VideoEncoder.H263 && type.equalsIgnoreCase(MediaFormat.MIMETYPE_VIDEO_H263))

|| (videoEncoderNum == MediaRecorder.VideoEncoder.H264 && type.equalsIgnoreCase(MediaFormat.MIMETYPE_VIDEO_AVC))

|| (videoEncoderNum == MediaRecorder.VideoEncoder.HEVC && type.equalsIgnoreCase(MediaFormat.MIMETYPE_VIDEO_HEVC))) {

CodecCapabilities codecCapabilities = info.getCapabilitiesForType(type);

videoCapabilities = codecCapabilities.getVideoCapabilities();

findVideoEncoder = true;

break;

}

}

if (findVideoEncoder) break;

}

try {

// Get the fps ranges supported for the current video size

Range[] range = getSupportedHighSpeedVideoFPSRange(mCameraId, videoSize);

for (Range r : range) {

// To support HFR for both preview and recording,

// minimal FPS needs to be equal to maximum FPS

if ((int) r.getUpper() == (int) r.getLower()) {

if (videoCapabilities != null) {

if (videoCapabilities.areSizeAndRateSupported(

videoSize.getWidth(), videoSize.getHeight(), (int) r.getUpper())) {

supported.add("hfr" + String.valueOf(r.getUpper()));

supported.add("hsr" + String.valueOf(r.getUpper()));

}

}

}

}

} catch (IllegalArgumentException ex) {

Log.w(TAG, "HFR is not supported for this resolution " + ex);

}

.......

}

return supported;

}

2. Framework

\frameworks\base\core\java\android\hardware\camera2\params\StreamConfigurationMap.java

public Range<Integer>[] getHighSpeedVideoFpsRangesFor(Size size) {

// Check whether the currently selected video size supports HFR

Integer fpsRangeCount = mHighSpeedVideoSizeMap.get(size);

if (fpsRangeCount == null || fpsRangeCount == 0) {

throw new IllegalArgumentException(String.format(

"Size %s does not support high speed video recording", size));

}

@SuppressWarnings("unchecked")

Range<Integer>[] fpsRanges = new Range[fpsRangeCount];

int i = 0;

// Collect every fps range supported for the current video size

for (HighSpeedVideoConfiguration config : mHighSpeedVideoConfigurations) {

if (size.equals(config.getSize())) {

fpsRanges[i++] = config.getFpsRange();

}

}

return fpsRanges;

}

Here, mHighSpeedVideoConfigurations is initialized in the following method, in \frameworks\base\core\java\android\hardware\camera2\impl\CameraMetadataNative.java.

private StreamConfigurationMap getStreamConfigurationMap() {

StreamConfiguration[] configurations = getBase(

CameraCharacteristics.SCALER_AVAILABLE_STREAM_CONFIGURATIONS);

StreamConfigurationDuration[] minFrameDurations = getBase(

CameraCharacteristics.SCALER_AVAILABLE_MIN_FRAME_DURATIONS);

StreamConfigurationDuration[] stallDurations = getBase(

CameraCharacteristics.SCALER_AVAILABLE_STALL_DURATIONS);

StreamConfiguration[] depthConfigurations = getBase(

CameraCharacteristics.DEPTH_AVAILABLE_DEPTH_STREAM_CONFIGURATIONS);

StreamConfigurationDuration[] depthMinFrameDurations = getBase(

CameraCharacteristics.DEPTH_AVAILABLE_DEPTH_MIN_FRAME_DURATIONS);

StreamConfigurationDuration[] depthStallDurations = getBase(

CameraCharacteristics.DEPTH_AVAILABLE_DEPTH_STALL_DURATIONS);

// Get the high-speed video configuration information from the camx layer

HighSpeedVideoConfiguration[] highSpeedVideoConfigurations = getBase(

CameraCharacteristics.CONTROL_AVAILABLE_HIGH_SPEED_VIDEO_CONFIGURATIONS);

ReprocessFormatsMap inputOutputFormatsMap = getBase(

CameraCharacteristics.SCALER_AVAILABLE_INPUT_OUTPUT_FORMATS_MAP);

int[] capabilities = getBase(CameraCharacteristics.REQUEST_AVAILABLE_CAPABILITIES);

boolean listHighResolution = false;

for (int capability : capabilities) {

if (capability == CameraCharacteristics.REQUEST_AVAILABLE_CAPABILITIES_BURST_CAPTURE) {

listHighResolution = true;

break;

}

}

// Create the StreamConfigurationMap and validate the configuration information.

return new StreamConfigurationMap(

configurations, minFrameDurations, stallDurations,

depthConfigurations, depthMinFrameDurations, depthStallDurations,

highSpeedVideoConfigurations, inputOutputFormatsMap,

listHighResolution);

}

3. HAL

The HAL-layer cameraInfo is obtained during ConfigureStreams, which was analyzed in section 2 of this article. vendor\qcom\proprietary\camx\src\core\hal\camxhal3.cpp calls pHALDevice->ConfigureStreams(pStreamConfigs);, which enters \vendor\qcom\proprietary\camx\src\core\hal\camxhaldevice.cpp:

////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

// HALDevice::ConfigureStreams

////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

CamxResult HALDevice::ConfigureStreams(

Camera3StreamConfig* pStreamConfigs)

{

CamxResult result = CamxResultSuccess;

// Validate the incoming stream configurations

result = CheckValidStreamConfig(pStreamConfigs); // This calls pHWEnvironment->GetCameraInfo(logicalCameraId, &cameraInfo);

if ((StreamConfigModeConstrainedHighSpeed == pStreamConfigs->operationMode) ||

(StreamConfigModeSuperSlowMotionFRC == pStreamConfigs->operationMode))

{

SearchNumBatchedFrames (pStreamConfigs, &m_usecaseNumBatchedFrames, &m_FPSValue);

CAMX_ASSERT(m_usecaseNumBatchedFrames > 1);

}

else

{

// Not a HFR usecase batch frames value need to set to 1.

m_usecaseNumBatchedFrames = 1;

}

......

return result;

}

The platform and sensor capability information is initialized in \vendor\qcom\proprietary\camx\src\core\camxhwenvironment.cpp.

VOID HwEnvironment::InitCaps()

{

......

if (CamxResultSuccess == result)

{

// Platform and sensor capabilities are mainly initialized by the following functions:

ProbeImageSensorModules(); // Creates the ImageSensorModuleDataManager; its Initialize() calls CreateAllSensorModuleSetManagers, which loads the bin files holding the sensor information.

EnumerateDevices();

InitializeSensorSubModules();

InitializeSensorStaticCaps(); // Goes on to call ImageSensorModuleData::GetStaticCaps to obtain the sensor's capabilities

result = m_staticEntryMethods.GetStaticCaps(&m_platformCaps[0]);

// copy the static capacity to remaining sensor's

for (UINT index = 1; index < m_numberSensors; index++)

{

Utils::Memcpy(&m_platformCaps[index], &m_platformCaps[0], sizeof(m_platformCaps[0]));

}

if (NULL != m_pOEMInterface->pInitializeExtendedPlatformStaticCaps)

{

m_pOEMInterface->pInitializeExtendedPlatformStaticCaps(&m_platformCaps[0], m_numberSensors);

}

}

......

}

When ProbeImageSensorModules creates the ImageSensorModuleDataManager, the module bin files are loaded to obtain the sensor information: \vendor\qcom\proprietary\camx\src\core\camximagesensormoduledatamanager.cpp

CamxResult ImageSensorModuleDataManager::CreateAllSensorModuleSetManagers()

{

CamxResult result = CamxResultSuccess;

ImageSensorModuleSetManager* pSensorModuleSetManager = NULL;

UINT16 fileCount = 0;

CHAR binaryFiles[MaxSensorModules][FILENAME_MAX];

// The camera module currently used on the 8150 MTP is ov12a10 (wide), so com.qti.sensormodule.ofilm_ov12a10.bin is loaded.

fileCount = OsUtils::GetFilesFromPath(SensorModulesPath, FILENAME_MAX, &binaryFiles[0][0], "*", "sensormodule", "*", "bin");

CAMX_ASSERT((fileCount != 0) && (fileCount < MaxSensorModules));

m_numSensorModuleManagers = 0;

if ((fileCount == 0) || (fileCount >= MaxSensorModules))

{

CAMX_LOG_ERROR(CamxLogGroupSensor, "Invalid fileCount", fileCount);

result = CamxResultEFailed;

}

else

{

for (UINT i = 0; i < fileCount; i++)

{

result = GetSensorModuleManagerObj(&binaryFiles[i][0], &pSensorModuleSetManager);

if (CamxResultSuccess == result)

{

m_pSensorModuleManagers[m_numSensorModuleManagers++] = pSensorModuleSetManager;

}

else

{

CAMX_LOG_ERROR(CamxLogGroupSensor,

"GetSensorModuleManagerObj failed i: %d binFile: %s",

i, &binaryFiles[i][0]);

}

}

CAMX_ASSERT(m_numSensorModuleManagers > 0);

if (0 == m_numSensorModuleManagers)

{

CAMX_LOG_ERROR(CamxLogGroupSensor, "Invalid number of sensor module managers");

result = CamxResultEFailed;

}

}

return result;

}

Call sequence diagram for obtaining the sensor's static capabilities:

To change the contents of the com.qti.sensormodule.ofilm_ov12a10.bin mentioned above, edit the corresponding XML and recompile to regenerate the bin. Path: \vendor\qcom\proprietary\chi-cdk\vendor\sensor\default\ov12a10.
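The GetFilesFromPath call in CreateAllSensorModuleSetManagers passes the parts "*", "sensormodule", "*" and "bin". The sketch below assumes those parts are joined with dots, which is consistent with the name com.qti.sensormodule.ofilm_ov12a10.bin, and checks whether a file name has that shape. The function isSensorModuleBin is hypothetical and is not part of camx; the actual matching is done inside OsUtils.

```cpp
#include <cstddef>
#include <string>

// Returns true for names shaped like "<vendor>.sensormodule.<module>.bin",
// where both <vendor> and <module> are non-empty.
bool isSensorModuleBin(const std::string& fileName) {
    const std::string marker = ".sensormodule.";
    const std::string extension = ".bin";

    const std::size_t markerPos = fileName.find(marker);
    if (markerPos == std::string::npos || markerPos == 0) {
        return false;  // marker missing, or vendor part empty
    }
    const std::size_t moduleStart = markerPos + marker.size();
    if (fileName.size() < moduleStart + extension.size() + 1) {
        return false;  // no room for a non-empty module name plus ".bin"
    }
    // The name must end with the ".bin" extension.
    return fileName.compare(fileName.size() - extension.size(),
                            extension.size(), extension) == 0;
}
```

Under this assumption com.qti.sensormodule.ofilm_ov12a10.bin is accepted, while the XML source file or a bin from another category is not.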

From ov12a10_sensor.xml you can see that 1080p supports up to 60 fps:

Note that after changing the frameRate parameter in the XML to 120 and updating the .bin, a 120 fps option does appear in the app settings. However, if the sensor can only output 1080p at 60 fps, the recorded video will stutter: the sensor's output frame rate is lower than the encoding rate, so many duplicate frames are inserted.

P.S.: The HFR use case requires a frame rate greater than or equal to 120 fps.
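The arithmetic behind these statements is simple enough to write down. The helper names below are hypothetical, and the duplicate-frame estimate assumes that every encoder slot the sensor cannot fill is covered by repeating the previous frame, which is a simplification of what the pipeline actually does.

```cpp
// The HFR use case needs at least 120 fps.
bool qualifiesForHfr(int fps) {
    return fps >= 120;
}

// Slow-motion factor when footage captured at captureFps is played back
// at playbackFps. Both arguments are assumed to be positive.
double slowdownFactor(int captureFps, int playbackFps) {
    return static_cast<double>(captureFps) / static_cast<double>(playbackFps);
}

// Estimated number of repeated frames per second when the encoder runs at
// encodeFps but the sensor can only deliver sensorFps (assumption: each
// missing frame is replaced by a copy of the previous one).
int repeatedFramesPerSecond(int sensorFps, int encodeFps) {
    return (encodeFps > sensorFps) ? (encodeFps - sensorFps) : 0;
}
```

Capturing at 120 fps and playing back at 24 fps gives a factor of 5. A sensor limited to 60 fps that feeds a 120 fps encoding session leaves about 60 slots per second to be filled with repeats, which is why the result looks jerky rather than smooth.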

Углубленный анализ переполнения памяти CUDA: OutOfMemoryError: CUDA не хватает памяти. Попыталась выделить 3,21 Ги Б (GPU 0; всего 8,00 Ги Б).

[Решено] ошибка установки conda. Среда решения: не удалось выполнить первоначальное зависание. Повторная попытка с помощью файла (графическое руководство).

Прочитайте нейросетевую модель Трансформера в одной статье

.ART Теплые зимние предложения уже открыты

Сравнительная таблица описания кодов ошибок Amap

Уведомление о последних правилах Points Mall в декабре 2022 года.

Даже новички могут быстро приступить к работе с легким сервером приложений.

Взгляд на RSAC 2024|Защита конфиденциальности в эпоху больших моделей

Вы используете ИИ каждый день и до сих пор не знаете, как ИИ дает обратную связь? Одна статья для понимания реализации в коде Python общих функций потерь генеративных моделей + анализ принципов расчета.

Используйте (внутренний) почтовый ящик для образовательных учреждений, чтобы использовать Microsoft Family Bucket (1T дискового пространства на одном диске и версию Office 365 для образовательных учреждений)

Руководство по началу работы с оперативным проектом (7) Практическое сочетание оперативного письма — оперативного письма на основе интеллектуальной системы вопросов и ответов службы поддержки клиентов

[docker] Версия сервера «Чтение 3» — создайте свою собственную программу чтения веб-текста

Обзор Cloud-init и этапы создания в рамках PVE

Корпоративные пользователи используют пакет регистрационных ресурсов для регистрации ICP для веб-сайта и активации оплаты WeChat H5 (с кодом платежного узла версии API V3)

Подробное объяснение таких показателей производительности с высоким уровнем параллелизма, как QPS, TPS, RT и пропускная способность.

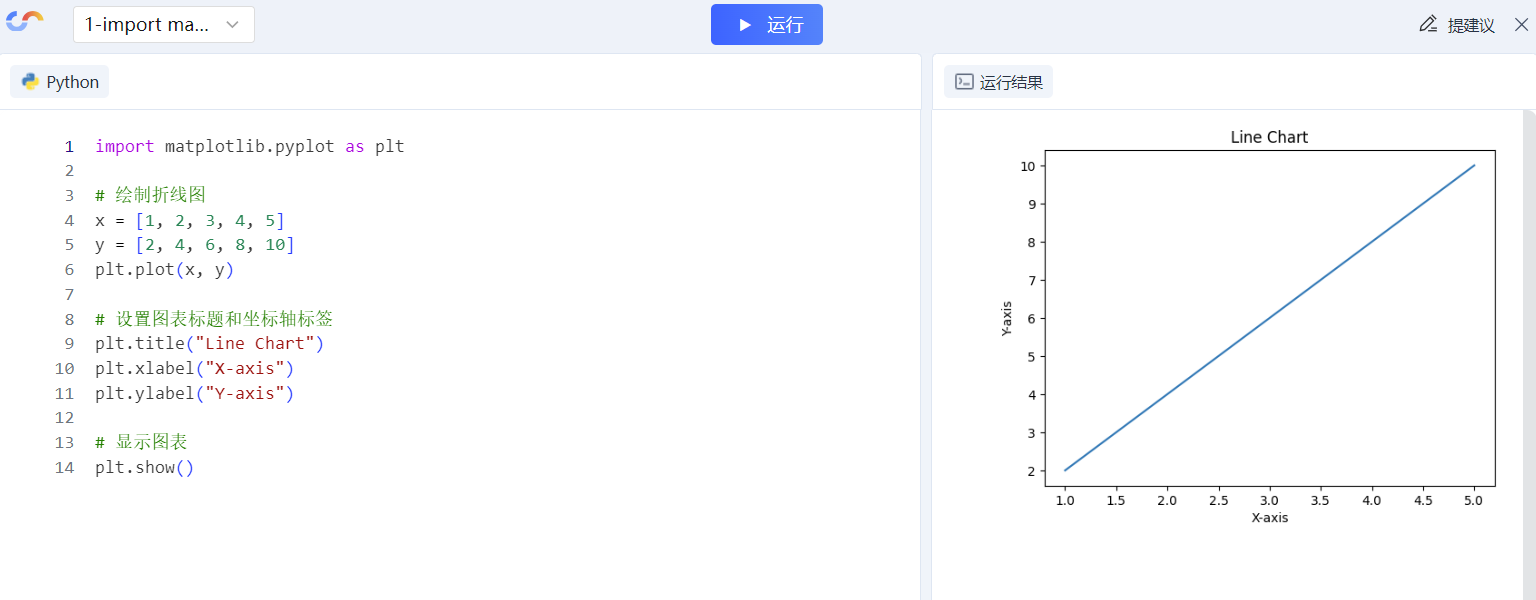

Удачи в конкурсе Python Essay Challenge, станьте первым, кто испытает новую функцию сообщества [Запускать блоки кода онлайн] и выиграйте множество изысканных подарков!

[Техническая посадка травы] Кровавая рвота и отделка позволяют вам необычным образом ощипывать гусиные перья! Не распространяйте информацию! ! !

[Официальное ограниченное по времени мероприятие] Сейчас ноябрь, напишите и получите приз

Прочтите это в одной статье: Учебник для няни по созданию сервера Huanshou Parlu на базе CVM-сервера.

Cloud Native | Что такое CRD (настраиваемые определения ресурсов) в K8s?

Как использовать Cloudflare CDN для настройки узла (CF самостоятельно выбирает IP) Гонконг, Китай/Азия узел/сводка и рекомендации внутреннего высокоскоростного IP-сегмента

Дополнительные правила вознаграждения амбассадоров акции в марте 2023 г.

Можно ли открыть частный сервер Phantom Beast Palu одним щелчком мыши? Супер простой урок для начинающих! (Прилагается метод обновления сервера)

[Играйте с Phantom Beast Palu] Обновите игровой сервер Phantom Beast Pallu одним щелчком мыши

Maotouhu делится: последний доступный внутри страны адрес склада исходного образа Docker 2024 года (обновлено 1 декабря)

Кодирование Base64 в MultipartFile

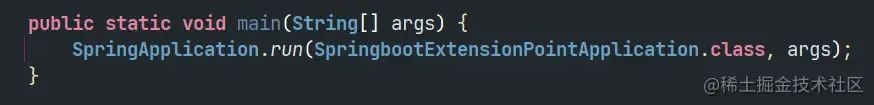

5 точек расширения SpringBoot, супер практично!

Глубокое понимание сопоставления индексов Elasticsearch.