Learning and using pytest 18. How do you use the pytest.ini configuration file?

1 Role of the configuration file

- It changes how pytest runs. pytest.ini has a fixed file name, and pytest reads its configuration information from this file.
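As a rough illustration of what "reading configuration information" involves: pytest interprets each ini value according to the type declared for that option (the --help output later in this article tags them as args, linelist, bool, string and so on). The stdlib-only sketch below imitates three of those types on a small, hypothetical pytest.ini. It is not pytest's code: pytest uses its own ini parser (iniconfig), so configparser is only a stand-in here, and the helper names read_pytest_section, as_linelist, as_args and as_bool are made up for the example.

```python
import configparser
import shlex
from typing import List

# Hypothetical pytest.ini content, modeled on the options used in this article.
SAMPLE_INI = """
[pytest]
markers =
    name: run the name
    old: run the old
    case: run the test_case
xfail_strict = True
addopts = -v --reruns=2 --count=2
log_cli = False
"""

TRUE_VALUES = {"y", "yes", "t", "true", "on", "1"}
FALSE_VALUES = {"n", "no", "f", "false", "off", "0"}


def read_pytest_section(text: str) -> configparser.SectionProxy:
    """Parse ini text and return its [pytest] section (configparser stands in for pytest's parser)."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return parser["pytest"]


def as_linelist(value: str) -> List[str]:
    """'linelist': one entry per non-empty line, surrounding whitespace removed."""
    return [line.strip() for line in value.splitlines() if line.strip()]


def as_args(value: str) -> List[str]:
    """'args': split the way a shell would split a command line."""
    return shlex.split(value)


def as_bool(value: str) -> bool:
    """'bool': accept common true/false spellings and reject anything else."""
    normalized = value.strip().lower()
    if normalized in TRUE_VALUES:
        return True
    if normalized in FALSE_VALUES:
        return False
    raise ValueError(f"invalid boolean value: {value!r}")


if __name__ == "__main__":
    section = read_pytest_section(SAMPLE_INI)
    print(as_linelist(section["markers"]))
    print(as_args(section["addopts"]))
    print(as_bool(section["xfail_strict"]), as_bool(section["log_cli"]))
```

Running it prints the three marker lines as a list, the addopts value split into separate arguments, and True False for the two boolean options.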

2 File format

# File name: pytest.ini
[pytest]
addopts =
xfail_strict =

3 View the pytest.ini options

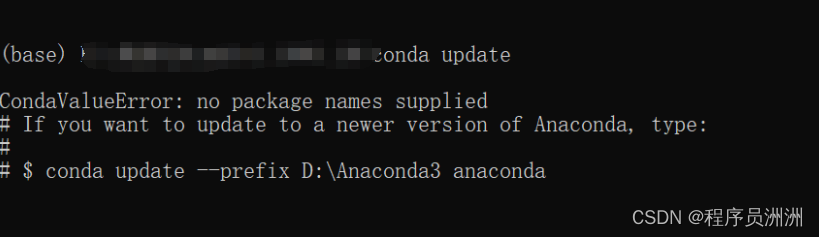

- Run the command pytest --help; the output is as follows:

C:\Users\Administrator>pytest --help

usage: pytest [options] [file_or_dir] [file_or_dir] [...]

positional arguments:

file_or_dir

general:

-k EXPRESSION only run tests which match the given substring expression. An expression is a python evaluatable

expression where all names are substring-matched against test names and their parent classes.

Example: -k 'test_method or test_other' matches all test functions and classes whose name

contains 'test_method' or 'test_other', while -k 'not test_method' matches those that don't

contain 'test_method' in their names. -k 'not test_method and not test_other' will eliminate the

matches. Additionally keywords are matched to classes and functions containing extra names in

their 'extra_keyword_matches' set, as well as functions which have names assigned directly to

them. The matching is case-insensitive.

-m MARKEXPR only run tests matching given mark expression.

For example: -m 'mark1 and not mark2'.

--markers show markers (builtin, plugin and per-project ones).

-x, --exitfirst exit instantly on first error or failed test.

--fixtures, --funcargs

show available fixtures, sorted by plugin appearance (fixtures with leading '_' are only shown

with '-v')

--fixtures-per-test show fixtures per test

--pdb start the interactive Python debugger on errors or KeyboardInterrupt.

--pdbcls=modulename:classname

start a custom interactive Python debugger on errors. For example:

--pdbcls=IPython.terminal.debugger:TerminalPdb

--trace Immediately break when running each test.

--capture=method per-test capturing method: one of fd|sys|no|tee-sys.

-s shortcut for --capture=no.

--runxfail report the results of xfail tests as if they were not marked

--lf, --last-failed rerun only the tests that failed at the last run (or all if none failed)

--ff, --failed-first run all tests, but run the last failures first.

This may re-order tests and thus lead to repeated fixture setup/teardown.

--nf, --new-first run tests from new files first, then the rest of the tests sorted by file mtime

--cache-show=[CACHESHOW]

show cache contents, don't perform collection or tests. Optional argument: glob (default: '*').

--cache-clear remove all cache contents at start of test run.

--lfnf={all,none}, --last-failed-no-failures={all,none}

which tests to run with no previously (known) failures.

--sw, --stepwise exit on test failure and continue from last failing test next time

--sw-skip, --stepwise-skip

ignore the first failing test but stop on the next failing test

--allure-severities=SEVERITIES_SET

Comma-separated list of severity names.

Tests only with these severities will be run.

Possible values are: blocker, critical, normal, minor, trivial.

--allure-epics=EPICS_SET

Comma-separated list of epic names.

Run tests that have at least one of the specified feature labels.

--allure-features=FEATURES_SET

Comma-separated list of feature names.

Run tests that have at least one of the specified feature labels.

--allure-stories=STORIES_SET

Comma-separated list of story names.

Run tests that have at least one of the specified story labels.

--allure-link-pattern=LINK_TYPE:LINK_PATTERN

Url pattern for link type. Allows short links in test,

like 'issue-1'. Text will be formatted to full url with python

str.format().

reporting:

--durations=N show N slowest setup/test durations (N=0 for all).

--durations-min=N Minimal duration in seconds for inclusion in slowest list. Default 0.005

-v, --verbose increase verbosity.

--no-header disable header

--no-summary disable summary

-q, --quiet decrease verbosity.

--verbosity=VERBOSE set verbosity. Default is 0.

-r chars show extra test summary info as specified by chars: (f)ailed, (E)rror, (s)kipped, (x)failed,

(X)passed, (p)assed, (P)assed with output, (a)ll except passed (p/P), or (A)ll. (w)arnings are

enabled by default (see --disable-warnings), 'N' can be used to reset the list. (default: 'fE').

--disable-warnings, --disable-pytest-warnings

disable warnings summary

-l, --showlocals show locals in tracebacks (disabled by default).

--tb=style traceback print mode (auto/long/short/line/native/no).

--show-capture={no,stdout,stderr,log,all}

Controls how captured stdout/stderr/log is shown on failed tests. Default is 'all'.

--full-trace don't cut any tracebacks (default is to cut).

--color=color color terminal output (yes/no/auto).

--code-highlight={yes,no}

Whether code should be highlighted (only if --color is also enabled)

--pastebin=mode send failed|all info to bpaste.net pastebin service.

--junit-xml=path create junit-xml style report file at given path.

--junit-prefix=str prepend prefix to classnames in junit-xml output

--html=path create html report file at given path.

--self-contained-html

create a self-contained html file containing all necessary styles, scripts, and images - this

means that the report may not render or function where CSP restrictions are in place (see

https://developer.mozilla.org/docs/Web/Security/CSP)

--css=path append given css file content to report style file.

pytest-warnings:

-W PYTHONWARNINGS, --pythonwarnings=PYTHONWARNINGS

set which warnings to report, see -W option of python itself.

--maxfail=num exit after first num failures or errors.

--strict-config any warnings encountered while parsing the `pytest` section of the configuration file raise

errors.

--strict-markers markers not registered in the `markers` section of the configuration file raise errors.

--strict (deprecated) alias to --strict-markers.

-c file load configuration from `file` instead of trying to locate one of the implicit configuration

files.

--continue-on-collection-errors

Force test execution even if collection errors occur.

--rootdir=ROOTDIR Define root directory for tests. Can be relative path: 'root_dir', './root_dir',

'root_dir/another_dir/'; absolute path: '/home/user/root_dir'; path with variables:

'$HOME/root_dir'.

collection:

--collect-only, --co only collect tests, don't execute them.

--pyargs try to interpret all arguments as python packages.

--ignore=path ignore path during collection (multi-allowed).

--ignore-glob=path ignore path pattern during collection (multi-allowed).

--deselect=nodeid_prefix

deselect item (via node id prefix) during collection (multi-allowed).

--confcutdir=dir only load conftest.py's relative to specified dir.

--noconftest Don't load any conftest.py files.

--keep-duplicates Keep duplicate tests.

--collect-in-virtualenv

Don't ignore tests in a local virtualenv directory

--import-mode={prepend,append,importlib}

prepend/append to sys.path when importing test modules and conftest files, default is to

prepend.

--doctest-modules run doctests in all .py modules

--doctest-report={none,cdiff,ndiff,udiff,only_first_failure}

choose another output format for diffs on doctest failure

--doctest-glob=pat doctests file matching pattern, default: test*.txt

--doctest-ignore-import-errors

ignore doctest ImportErrors

--doctest-continue-on-failure

for a given doctest, continue to run after the first failure

test session debugging and configuration:

--basetemp=dir base temporary directory for this test run.(warning: this directory is removed if it exists)

-V, --version display pytest version and information about plugins.When given twice, also display information

about plugins.

-h, --help show help message and configuration info

-p name early-load given plugin module name or entry point (multi-allowed).

To avoid loading of plugins, use the `no:` prefix, e.g. `no:doctest`.

--trace-config trace considerations of conftest.py files.

--debug store internal tracing debug information in 'pytestdebug.log'.

-o OVERRIDE_INI, --override-ini=OVERRIDE_INI

override ini option with "option=value" style, e.g. `-o xfail_strict=True -o cache_dir=cache`.

--assert=MODE Control assertion debugging tools.

'plain' performs no assertion debugging.

'rewrite' (the default) rewrites assert statements in test modules on import to provide assert

expression information.

--setup-only only setup fixtures, do not execute tests.

--setup-show show setup of fixtures while executing tests.

--setup-plan show what fixtures and tests would be executed but don't execute anything.

logging:

--log-level=LEVEL level of messages to catch/display.

Not set by default, so it depends on the root/parent log handler's effective level, where it is

"WARNING" by default.

--log-format=LOG_FORMAT

log format as used by the logging module.

--log-date-format=LOG_DATE_FORMAT

log date format as used by the logging module.

--log-cli-level=LOG_CLI_LEVEL

cli logging level.

--log-cli-format=LOG_CLI_FORMAT

log format as used by the logging module.

--log-cli-date-format=LOG_CLI_DATE_FORMAT

log date format as used by the logging module.

--log-file=LOG_FILE path to a file when logging will be written to.

--log-file-level=LOG_FILE_LEVEL

log file logging level.

--log-file-format=LOG_FILE_FORMAT

log format as used by the logging module.

--log-file-date-format=LOG_FILE_DATE_FORMAT

log date format as used by the logging module.

--log-auto-indent=LOG_AUTO_INDENT

Auto-indent multiline messages passed to the logging module. Accepts true|on, false|off or an

integer.

reporting:

--alluredir=DIR Generate Allure report in the specified directory (may not exist)

--clean-alluredir Clean alluredir folder if it exists

--allure-no-capture Do not attach pytest captured logging/stdout/stderr to report

coverage reporting with distributed testing support:

--cov=[SOURCE] Path or package name to measure during execution (multi-allowed). Use --cov= to not do any

source filtering and record everything.

--cov-report=TYPE Type of report to generate: term, term-missing, annotate, html, xml (multi-allowed). term, term-

missing may be followed by ":skip-covered". annotate, html and xml may be followed by ":DEST"

where DEST specifies the output location. Use --cov-report= to not generate any output.

--cov-config=PATH Config file for coverage. Default: .coveragerc

--no-cov-on-fail Do not report coverage if test run fails. Default: False

--no-cov Disable coverage report completely (useful for debuggers). Default: False

--cov-fail-under=MIN Fail if the total coverage is less than MIN.

--cov-append Do not delete coverage but append to current. Default: False

--cov-branch Enable branch coverage.

--cov-context=CONTEXT

Dynamic contexts to use. "test" for now.

forked subprocess test execution:

--forked box each test run in a separate process (unix)

re-run failing tests to eliminate flaky failures:

--only-rerun=ONLY_RERUN

If passed, only rerun errors matching the regex provided. Pass this flag multiple times to

accumulate a list of regexes to match

--reruns=RERUNS number of times to re-run failed tests. defaults to 0.

--reruns-delay=RERUNS_DELAY

add time (seconds) delay between reruns.

--rerun-except=RERUN_EXCEPT

If passed, only rerun errors other than matching the regex provided. Pass this flag multiple

times to accumulate a list of regexes to match

distributed and subprocess testing:

-n numprocesses, --numprocesses=numprocesses

shortcut for '--dist=load --tx=NUM*popen', you can use 'auto' here for auto detection CPUs

number on host system and it will be 0 when used with --pdb

--maxprocesses=maxprocesses

limit the maximum number of workers to process the tests when using --numprocesses=auto

--max-worker-restart=MAXWORKERRESTART, --max-slave-restart=MAXWORKERRESTART

maximum number of workers that can be restarted when crashed (set to zero to disable this

feature)

'--max-slave-restart' option is deprecated and will be removed in a future release

--dist=distmode set mode for distributing tests to exec environments.

each: send each test to all available environments.

load: load balance by sending any pending test to any available environment.

loadscope: load balance by sending pending groups of tests in the same scope to any available

environment.

loadfile: load balance by sending test grouped by file to any available environment.

(default) no: run tests inprocess, don't distribute.

--tx=xspec add a test execution environment. some examples: --tx popen//python=python2.5 --tx

socket=192.168.1.102:8888 --tx ssh=user@codespeak.net//chdir=testcache

-d load-balance tests. shortcut for '--dist=load'

--rsyncdir=DIR add directory for rsyncing to remote tx nodes.

--rsyncignore=GLOB add expression for ignores when rsyncing to remote tx nodes.

--boxed backward compatibility alias for pytest-forked --forked

-f, --looponfail run tests in subprocess, wait for modified files and re-run failing test set until all pass.

custom options:

--metadata=key value additional metadata.

--count=COUNT Number of times to repeat each test

--repeat-scope={function,class,module,session}

Scope for repeating tests

[pytest] ini-options in the first pytest.ini|tox.ini|setup.cfg file found:

markers (linelist): markers for test functions

empty_parameter_set_mark (string):

default marker for empty parametersets

norecursedirs (args): directory patterns to avoid for recursion

testpaths (args): directories to search for tests when no files or directories are given in the command line.

filterwarnings (linelist):

Each line specifies a pattern for warnings.filterwarnings. Processed after -W/--pythonwarnings.

usefixtures (args): list of default fixtures to be used with this project

python_files (args): glob-style file patterns for Python test module discovery

python_classes (args):

prefixes or glob names for Python test class discovery

python_functions (args):

prefixes or glob names for Python test function and method discovery

disable_test_id_escaping_and_forfeit_all_rights_to_community_support (bool):

disable string escape non-ascii characters, might cause unwanted side effects(use at your own

risk)

console_output_style (string):

console output: "classic", or with additional progress information ("progress" (percentage) |

"count").

xfail_strict (bool): default for the strict parameter of xfail markers when not given explicitly (default: False)

enable_assertion_pass_hook (bool):

Enables the pytest_assertion_pass hook.Make sure to delete any previously generated pyc cache

files.

junit_suite_name (string):

Test suite name for JUnit report

junit_logging (string):

Write captured log messages to JUnit report: one of no|log|system-out|system-err|out-err|all

junit_log_passing_tests (bool):

Capture log information for passing tests to JUnit report:

junit_duration_report (string):

Duration time to report: one of total|call

junit_family (string):

Emit XML for schema: one of legacy|xunit1|xunit2

doctest_optionflags (args):

option flags for doctests

doctest_encoding (string):

encoding used for doctest files

cache_dir (string): cache directory path.

log_level (string): default value for --log-level

log_format (string): default value for --log-format

log_date_format (string):

default value for --log-date-format

log_cli (bool): enable log display during test run (also known as "live logging").

log_cli_level (string):

default value for --log-cli-level

log_cli_format (string):

default value for --log-cli-format

log_cli_date_format (string):

default value for --log-cli-date-format

log_file (string): default value for --log-file

log_file_level (string):

default value for --log-file-level

log_file_format (string):

default value for --log-file-format

log_file_date_format (string):

default value for --log-file-date-format

log_auto_indent (string):

default value for --log-auto-indent

faulthandler_timeout (string):

Dump the traceback of all threads if a test takes more than TIMEOUT seconds to finish.

addopts (args): extra command line options

minversion (string): minimally required pytest version

required_plugins (args):

plugins that must be present for pytest to run

rsyncdirs (pathlist): list of (relative) paths to be rsynced for remote distributed testing.

rsyncignore (pathlist):

list of (relative) glob-style paths to be ignored for rsyncing.

looponfailroots (pathlist):

directories to check for changes

environment variables:

PYTEST_ADDOPTS extra command line options

PYTEST_PLUGINS comma-separated plugins to load during startup

PYTEST_DISABLE_PLUGIN_AUTOLOAD set to disable plugin auto-loading

PYTEST_DEBUG set to enable debug tracing of pytest's internals

to see available markers type: pytest --markers

to see available fixtures type: pytest --fixtures

(shown according to specified file_or_dir or current dir if not specified; fixtures with leading '_' are only shown with the '-v' option

- The options that can be set in pytest.ini are the ones listed in the "[pytest] ini-options in the first pytest.ini|tox.ini|setup.cfg file found" section of the output above, from markers through looponfailroots.

4 Storage location

- The file must be named pytest.ini and placed in the root directory of the project.
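In practice you do not have to start pytest from the root itself: pytest looks for its configuration file in the common ancestor directory of the paths given on the command line (or the current directory when none are given) and then in that directory's parents. The sketch below shows only this upward search for a file named pytest.ini. It is a simplification under stated assumptions: the real lookup also considers pyproject.toml, tox.ini and setup.cfg and honours -c and --rootdir, and find_pytest_ini is a hypothetical helper, not a pytest API.

```python
import tempfile
from pathlib import Path
from typing import Optional


def find_pytest_ini(start: Path) -> Optional[Path]:
    """Return the first pytest.ini in `start` or one of its parent directories (simplified)."""
    start = start.resolve()
    for directory in [start, *start.parents]:
        candidate = directory / "pytest.ini"
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "pytest.ini").write_text("[pytest]\nxfail_strict = True\n")
        tests_dir = root / "test_case" / "test_i"
        tests_dir.mkdir(parents=True)
        # Starting from a nested test directory still reaches the project-root pytest.ini.
        print(find_pytest_ini(tests_dir))
```

This is why a pytest.ini in the project root also applies when tests are run from a subdirectory such as test_case\test_i.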

5 Common options

5.1 markers

- The following code uses the @pytest.mark.xxx approach:

# -*- coding:utf-8 -*-
# Author: Insects Without Borders
# Date: March 15, 2023
# File name: test_ini.py
# Purpose: using pytest.ini
# Contact: VX (NoamaNelson)
# Blog: https://blog.csdn.net/NoamaNelson
import pytest


# one_name, two_old and th_weight do not start with "test", so pytest's
# default rules do not collect them; with -m=case only TestCase runs anyway.
@pytest.mark.name
def one_name():
    print("name is xiaoming")


@pytest.mark.old
def two_old():
    print("old is 20")


def th_weight():
    print("weight is 50")


@pytest.mark.case
class TestCase:
    def test_case_01(self):
        print("case_01")

    def test_case_02(self):
        print("case_02")


if __name__ == '__main__':
    pytest.main(["-v", "test_ini.py", "-m=case"])

- Marker names are easy to mistype (and pytest warns about markers it does not know), so they can be registered in pytest.ini:

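# pytest.ini
[pytest]
markers =
    name: run the name
    old: run the old
    case: run the test_case

- The marker lines are indented under markers =; this is how the ini format continues a multi-line value.

- Check the registered markers with pytest --markers: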

(venv) F:\pytest_study\test_case\test_i>pytest --markers

@pytest.mark.name: run the name

@pytest.mark.old: run the old

@pytest.mark.case: run the test_case

@pytest.mark.forked: Always fork for this test.

@pytest.mark.flaky(reruns=1, reruns_delay=0): mark test to re-run up to 'reruns' times. Add a delay of 'reruns_delay' seconds between re-runs.

@pytest.mark.repeat(n): run the given test function `n` times.

@pytest.mark.run: specify ordering information for when tests should run in relation to one another. Provided by pytest-ordering. See also: http://pytest-ordering.readthedocs.org/

@pytest.mark.no_cover: disable coverage for this test.

@pytest.mark.allure_label: allure label marker

@pytest.mark.allure_link: allure link marker

@pytest.mark.allure_display_name: allure test name marker

@pytest.mark.allure_description: allure description

@pytest.mark.allure_description_html: allure description html

@pytest.mark.filterwarnings(warning): add a warning filter to the given test. see https://docs.pytest.org/en/stable/warnings.html#pytest-mark-filterwarnings

@pytest.mark.skip(reason=None): skip the given test function with an optional reason. Example: skip(reason="no way of currently testing this") skips the test.

@pytest.mark.skipif(condition, ..., *, reason=...): skip the given test function if any of the conditions evaluate to True. Example: skipif(sys.platform == 'win32') skips the test if we are on the win32 platform. See https://docs.pytest.org/en/stable/reference.html#pytest-mark-skipif

@pytest.mark.xfail(condition, ..., *, reason=..., run=True, raises=None, strict=xfail_strict): mark the test function as an expected failure if any of the conditions evaluate to True. Optionally specify a reason for better reporting and run=False if you don't even want to execute the test function. If only specific exception(s) are expected, you can list them in raises, and if the test fails in other ways, it will be reported as a true failure. See https://docs.pytest.org/en/stable/reference.html#pytest-mark-xfail

@pytest.mark.parametrize(argnames, argvalues): call a test function multiple times passing in different arguments in turn. argvalues generally needs to be a list of values if argnames specifies only one name or a list of tuples of values if argnames specifies multiple names. Example: @parametrize('arg1', [1,2]) would lead to two calls of the decorated test function, one with arg1=1 and another with arg1=2.see https://docs.pytest.org/en/stable/parametrize.html for more info and examples.

@pytest.mark.usefixtures(fixturename1, fixturename2, ...): mark tests as needing all of the specified fixtures. see https://docs.pytest.org/en/stable/fixture.html#usefixtures

@pytest.mark.tryfirst: mark a hook implementation function such that the plugin machinery will try to call it first/as early as possible.

@pytest.mark.trylast: mark a hook implementation function such that the plugin machinery will try to call it last/as late as possible.

5.2 xfail_strict

- Setting xfail_strict = True makes test cases that are marked @pytest.mark.xfail but actually pass (reported as XPASS) count as failures;

- Write pytest.ini as follows:

[pytest]
markers =
    name: run the name
    old: run the old
    case: run the test_case
xfail_strict = True

- The test code:

# -*- coding:utf-8 -*-
# Author: Insects Without Borders
# Date: March 15, 2023
# File name: test_ini01.py
# Purpose: using pytest.ini
# Contact: VX (NoamaNelson)
# Blog: https://blog.csdn.net/NoamaNelson
import pytest


@pytest.mark.name
def test_name():
    print("name is xiaoming")


@pytest.mark.old
def test_old():
    print("old is 20")


@pytest.mark.xfail()
def test_weight():
    a = 30
    b = 60
    # This assertion passes, so the xfail-marked test is an unexpected pass (XPASS).
    assert a != b


@pytest.mark.case
class TestCase:
    def test_case_01(self):
        print("case_01")

    def test_case_02(self):
        print("case_02")


if __name__ == '__main__':
    pytest.main(["-v", "test_ini01.py", "-m=case"])

- The results are below. test_weight is marked xfail but passes, so with xfail_strict = True it is reported as FAILED:

test_ini01.py::test_name PASSED [ 20%]name is xiaoming

test_ini01.py::test_old PASSED [ 40%]old is 20

test_ini01.py::test_weight FAILED [ 60%]

test_case\test_i\test_ini01.py:18 (test_weight)

[XPASS(strict)]

test_ini01.py::TestCase::test_case_01 PASSED [ 80%]case_01

test_ini01.py::TestCase::test_case_02 PASSED [100%]case_02

================================== FAILURES ===================================

_________________________________ test_weight _________________________________

[XPASS(strict)]

=========================== short test summary info ===========================

FAILED test_ini01.py::test_weight

========================= 1 failed, 4 passed in 0.05s =========================

5.3 addopts

The addopts option changes the default command-line options;

- For example, to generate an HTML report after the run, rerun each failed test up to 2 times, run every test 2 times, and distribute the tests across worker processes:

addopts = -v --reruns=2 --count=2 --html=reports.html --self-contained-html -n=auto

- Add this line to pytest.ini and these options no longer need to be typed on the command line (--reruns, --count, --html/--self-contained-html and -n come from the pytest-rerunfailures, pytest-repeat, pytest-html and pytest-xdist plugins respectively, which must be installed):

[pytest]
markers =
    name: run the name
    old: run the old
    case: run the test_case
xfail_strict = True
addopts = -v --reruns=2 --count=2 --html=reports.html --self-contained-html -n=auto

- Run results:

test_ini01.py::test_old[1-2]

test_ini01.py::test_old[2-2]

test_ini01.py::test_weight[1-2]

test_ini01.py::test_weight[2-2]

test_ini01.py::test_name[1-2]

test_ini01.py::TestCase::test_case_01[2-2]

test_ini01.py::test_name[2-2]

test_ini01.py::TestCase::test_case_01[1-2]

[gw3] [ 10%] PASSED test_ini01.py::test_old[2-2]

[gw6] [ 20%] PASSED test_ini01.py::TestCase::test_case_01[1-2]

[gw2] [ 30%] PASSED test_ini01.py::test_old[1-2]

[gw0] [ 40%] PASSED test_ini01.py::test_name[1-2]

test_ini01.py::TestCase::test_case_02[1-2]

[gw0] [ 50%] PASSED test_ini01.py::TestCase::test_case_02[1-2]

[gw1] [ 60%] PASSED test_ini01.py::test_name[2-2]

test_ini01.py::TestCase::test_case_02[2-2]

[gw7] [ 70%] PASSED test_ini01.py::TestCase::test_case_01[2-2]

[gw1] [ 80%] PASSED test_ini01.py::TestCase::test_case_02[2-2]

[gw5] [ 90%] RERUN test_ini01.py::test_weight[2-2]

test_ini01.py::test_weight[2-2]

[gw4] [100%] RERUN test_ini01.py::test_weight[1-2]

test_ini01.py::test_weight[1-2]

[gw5] [100%] RERUN test_ini01.py::test_weight[2-2]

test_ini01.py::test_weight[2-2]

[gw5] [100%] FAILED test_ini01.py::test_weight[2-2]

test_case\test_i\test_ini01.py:18 (test_weight[2-2])

[XPASS(strict)]

[gw4] [100%] RERUN test_ini01.py::test_weight[1-2]

test_ini01.py::test_weight[1-2]

[gw4] [100%] FAILED test_ini01.py::test_weight[1-2]

test_case\test_i\test_ini01.py:18 (test_weight[1-2])

[XPASS(strict)]

================================== FAILURES ===================================

______________________________ test_weight[2-2] _______________________________

[gw5] win32 -- Python 3.7.0 F:\pytest_study\venv\Scripts\python.exe

[XPASS(strict)]

______________________________ test_weight[1-2] _______________________________

[gw4] win32 -- Python 3.7.0 F:\pytest_study\venv\Scripts\python.exe

[XPASS(strict)]

-- generated html file: file://F:\pytest_study\test_case\test_i\reports.html --

=========================== short test summary info ===========================

FAILED test_ini01.py::test_weight[2-2]

FAILED test_ini01.py::test_weight[1-2]

==================== 2 failed, 8 passed, 4 rerun in 3.11s =====================

Commonly used addopts parameters:

| Parameter | Description |
|---|---|
| -s | Outputs debugging information; used to show what the test functions print |
| -v | Without it, only the module name is printed; with -v, the class name, module name and method name are printed, giving more detailed information |
| -q | Shows only the overall test result |
| -vs | The two parameters above can be used together |
| -n | Runs test cases in multiple processes or in a distributed way (requires the pytest-xdist plugin to be installed) |
| --html | Generates an HTML test report (requires the pytest-html plugin) |
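pytest's documentation describes the effective command line as: the addopts value from pytest.ini, then the contents of the PYTEST_ADDOPTS environment variable (listed under "environment variables" in the --help output above), then the arguments you actually type. The sketch below reproduces only that ordering; build_command_line is a hypothetical helper, and pytest's real argument handling does more than concatenate lists.

```python
import os
import shlex
from typing import List, Mapping, Sequence


def build_command_line(ini_addopts: str, environ: Mapping[str, str], cli_args: Sequence[str]) -> List[str]:
    """Concatenate option sources in the documented order (hypothetical helper)."""
    args: List[str] = []
    args.extend(shlex.split(ini_addopts))                        # 1. addopts from pytest.ini
    args.extend(shlex.split(environ.get("PYTEST_ADDOPTS", "")))  # 2. PYTEST_ADDOPTS
    args.extend(cli_args)                                        # 3. arguments typed by the user
    return args


if __name__ == "__main__":
    addopts = "-v --reruns=2 --count=2 --html=reports.html --self-contained-html -n=auto"
    print(build_command_line(addopts, os.environ, ["test_ini01.py"]))
```

Because the sources are concatenated in this order, a single-valued option typed on the command line (for example --tb=long) comes last and overrides the same option set in addopts, while counting options such as -v add up.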

5.4 log_cli

- log_cli prints logs to the console in real time ("live logging");

- log_cli is True or False (the default). Output with log_cli=True:

collecting ... collected 5 items

test_ini01.py::test_name PASSED [ 20%]name is xiaoming

test_ini01.py::test_old PASSED [ 40%]old is 20

test_ini01.py::test_weight FAILED [ 60%]

test_case\test_i\test_ini01.py:18 (test_weight)

[XPASS(strict)]

test_ini01.py::TestCase::test_case_01 PASSED [ 80%]case_01

test_ini01.py::TestCase::test_case_02 PASSED [100%]case_02

================================== FAILURES ===================================

_________________________________ test_weight _________________________________

[XPASS(strict)]

=========================== short test summary info ===========================

FAILED test_ini01.py::test_weight

========================= 1 failed, 4 passed in 0.05s =========================

- With log_cli=True, you can clearly see which package and module each test case is run under.
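Live logging only shows records that reach the effective log level, which (as the --log-level entry in the --help output notes) is WARNING unless configured. To see INFO records live, pytest.ini would also need a level, for example log_cli = True together with log_cli_level = INFO (both are listed among the ini options above). A minimal test module to try this with; the file name test_log_cli.py and the helper compute_weight_difference are hypothetical:

```python
# test_log_cli.py (hypothetical module for trying out log_cli)
import logging

logger = logging.getLogger(__name__)


def compute_weight_difference(a: int, b: int) -> int:
    """Hypothetical helper under test; logs at INFO and DEBUG levels."""
    logger.info("comparing a=%s and b=%s", a, b)
    diff = abs(a - b)
    logger.debug("difference is %s", diff)
    return diff


def test_weight_difference():
    # With log_cli = True and log_cli_level = INFO, pytest shows the INFO
    # record live while this test runs; the DEBUG record stays hidden.
    assert compute_weight_difference(30, 60) == 30
```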

5.5 norecursedirs

- When pytest collects test cases, it recursively walks all subdirectories;

- If some directories do not need to be searched, the norecursedirs option can be used to narrow pytest's search. Separate multiple patterns with spaces; a value set here replaces pytest's default list, so keep the default patterns you still need:

norecursedirs = .* build dist CVS _darcs {arch} *.egg

- For example:

[pytest]
markers =
    name: run the name
    old: run the old
    case: run the test_case
xfail_strict = True
# addopts = -v --reruns=2 --count=2 --html=reports.html --self-contained-html -n=auto
log_cli = False
norecursedirs = .* build dist CVS _darcs {arch} *.egg report test_case log

- You can also change pytest's default test-collection rules, using the python_files, python_classes and python_functions options;

- The default collection rules are:

Files whose names match test_*.py or *_test.py

Functions whose names start with test (usually written as test_)

Classes whose names start with Test; such a class must not have an __init__ method

Methods whose names start with test inside those Test classes

- For example, change the rules to:

[pytest]
python_files = test* test_* *_test
python_classes = Test* test*
python_functions = test_* test*

6 The complete pytest.ini used in this article

[pytest]
markers =
    name: run the name
    old: run the old
    case: run the test_case
xfail_strict = True
addopts = -v --reruns=2 --count=2 --html=reports.html --self-contained-html -n=auto
log_cli = False
norecursedirs = .* build dist CVS _darcs {arch} *.egg report test_case log
python_files = test* test_* *_test
python_classes = Test* test*
python_functions = test_* test*
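The collection settings in this file are glob patterns. The stdlib sketch below checks a few hypothetical names against them, under two assumptions that mirror what pytest does: file and directory patterns are matched with fnmatch against the base name, and only .py files are considered as test modules. It also imitates the "prefixes or glob names" rule that the --help output gives for python_classes and python_functions. One thing it makes visible: *_test has no .py suffix, so it does not match a file such as calc_test.py (pytest's default pattern is *_test.py). All helper names here are made up for the example.

```python
import fnmatch
from typing import Iterable

# Values copied from the pytest.ini above (whitespace-separated patterns).
PYTHON_FILES = "test* test_* *_test".split()
PYTHON_FUNCTIONS = "test_* test*".split()
NORECURSEDIRS = ".* build dist CVS _darcs {arch} *.egg report test_case log".split()


def matches_glob(name: str, patterns: Iterable[str]) -> bool:
    """True if the base name matches at least one fnmatch-style pattern."""
    return any(fnmatch.fnmatch(name, pattern) for pattern in patterns)


def matches_prefix_or_glob(name: str, options: Iterable[str]) -> bool:
    """Imitates 'prefixes or glob names': plain options are prefixes, others are globs."""
    for option in options:
        if name.startswith(option):
            return True
        if any(ch in option for ch in "*?[") and fnmatch.fnmatch(name, option):
            return True
    return False


def is_test_module(filename: str) -> bool:
    # Only .py files are candidates; python_files then decides which ones count.
    return filename.endswith(".py") and matches_glob(filename, PYTHON_FILES)


def is_skipped_dir(dirname: str) -> bool:
    return matches_glob(dirname, NORECURSEDIRS)


if __name__ == "__main__":
    for name in ["test_ini01.py", "calc_test.py", "helpers.py"]:
        print(name, "collected" if is_test_module(name) else "ignored")
    for name in [".git", "report", "test_case", "src"]:
        print(name, "skipped" if is_skipped_dir(name) else "searched")
    print(matches_prefix_or_glob("test_weight", PYTHON_FUNCTIONS))  # glob test* matches
    print(matches_prefix_or_glob("testfoo", ["test"]))             # default-style prefix
```

The sketch also shows that test_case itself matches norecursedirs in this configuration, so pytest will not descend into that directory when it recurses from a parent directory.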

Углубленный анализ переполнения памяти CUDA: OutOfMemoryError: CUDA не хватает памяти. Попыталась выделить 3,21 Ги Б (GPU 0; всего 8,00 Ги Б).

[Решено] ошибка установки conda. Среда решения: не удалось выполнить первоначальное зависание. Повторная попытка с помощью файла (графическое руководство).

Прочитайте нейросетевую модель Трансформера в одной статье

.ART Теплые зимние предложения уже открыты

Сравнительная таблица описания кодов ошибок Amap

Уведомление о последних правилах Points Mall в декабре 2022 года.

Даже новички могут быстро приступить к работе с легким сервером приложений.

Взгляд на RSAC 2024|Защита конфиденциальности в эпоху больших моделей

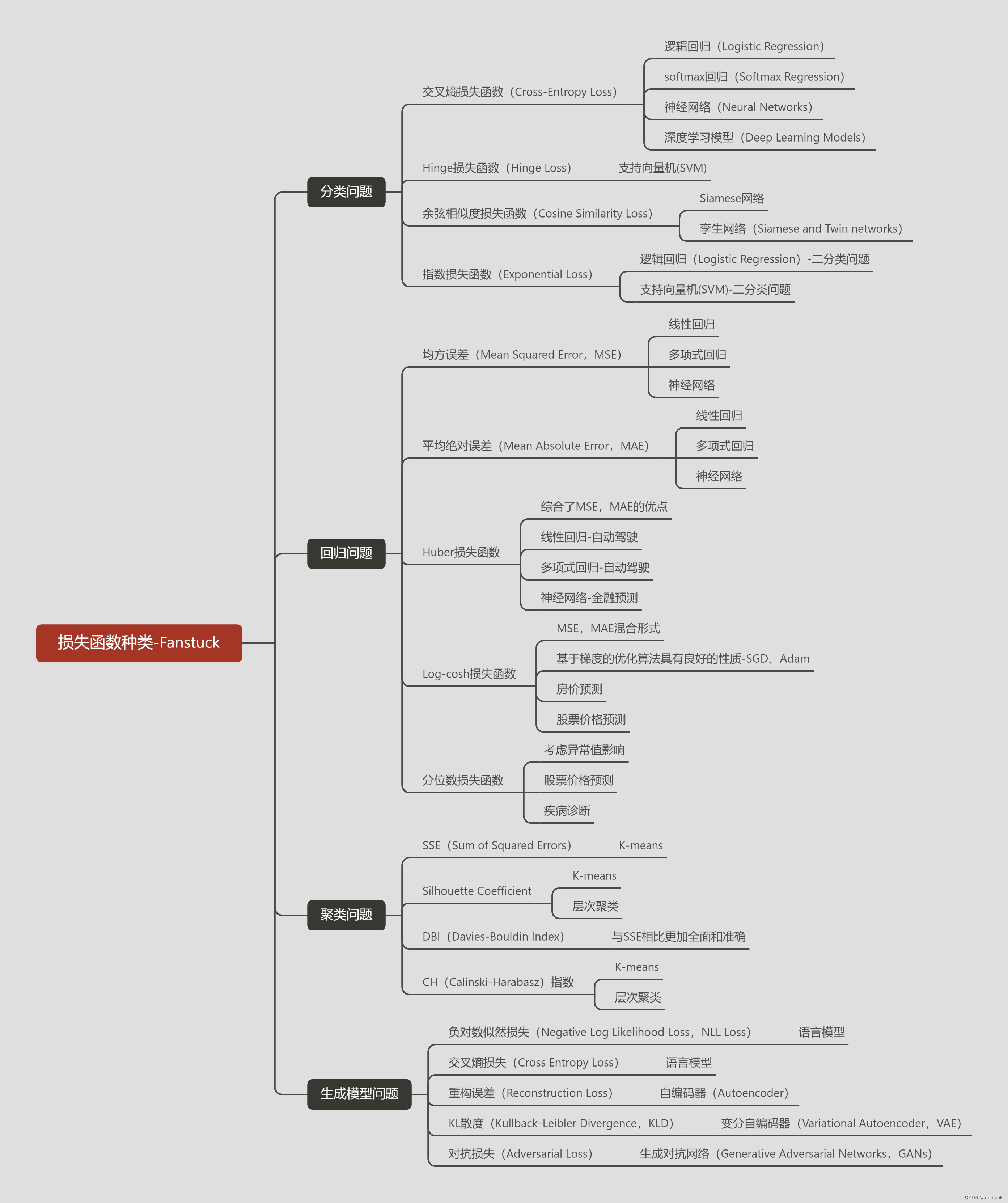

Вы используете ИИ каждый день и до сих пор не знаете, как ИИ дает обратную связь? Одна статья для понимания реализации в коде Python общих функций потерь генеративных моделей + анализ принципов расчета.

Используйте (внутренний) почтовый ящик для образовательных учреждений, чтобы использовать Microsoft Family Bucket (1T дискового пространства на одном диске и версию Office 365 для образовательных учреждений)

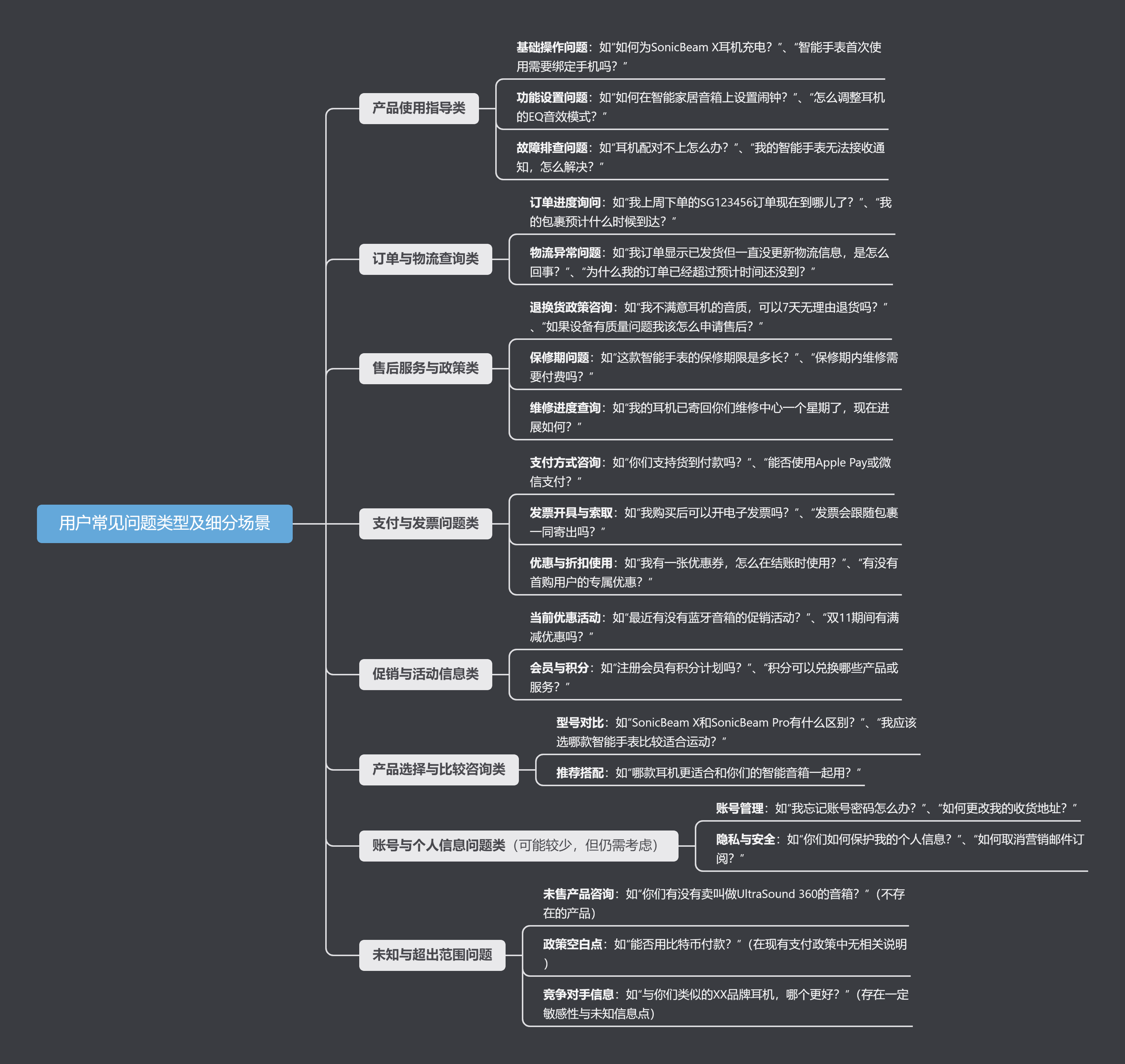

Руководство по началу работы с оперативным проектом (7) Практическое сочетание оперативного письма — оперативного письма на основе интеллектуальной системы вопросов и ответов службы поддержки клиентов

[docker] Версия сервера «Чтение 3» — создайте свою собственную программу чтения веб-текста

Обзор Cloud-init и этапы создания в рамках PVE

Корпоративные пользователи используют пакет регистрационных ресурсов для регистрации ICP для веб-сайта и активации оплаты WeChat H5 (с кодом платежного узла версии API V3)

Подробное объяснение таких показателей производительности с высоким уровнем параллелизма, как QPS, TPS, RT и пропускная способность.

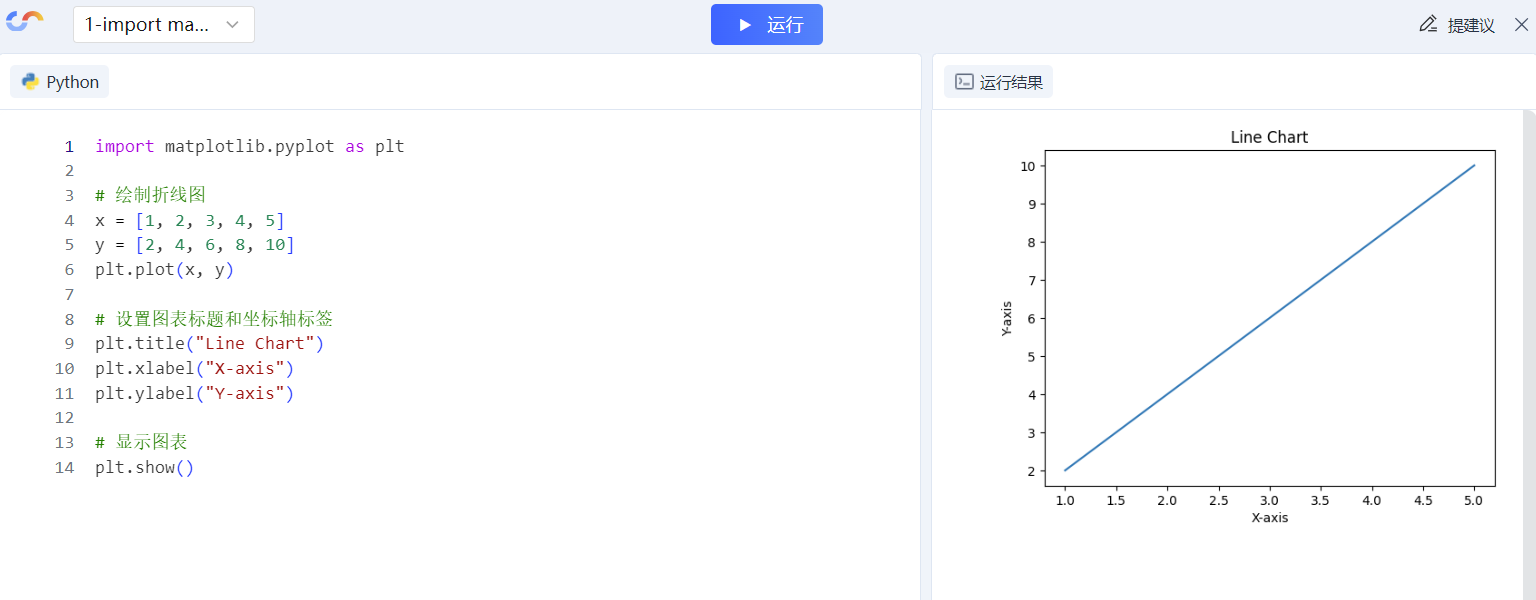

Удачи в конкурсе Python Essay Challenge, станьте первым, кто испытает новую функцию сообщества [Запускать блоки кода онлайн] и выиграйте множество изысканных подарков!

[Техническая посадка травы] Кровавая рвота и отделка позволяют вам необычным образом ощипывать гусиные перья! Не распространяйте информацию! ! !

[Официальное ограниченное по времени мероприятие] Сейчас ноябрь, напишите и получите приз

Прочтите это в одной статье: Учебник для няни по созданию сервера Huanshou Parlu на базе CVM-сервера.

Cloud Native | Что такое CRD (настраиваемые определения ресурсов) в K8s?

Как использовать Cloudflare CDN для настройки узла (CF самостоятельно выбирает IP) Гонконг, Китай/Азия узел/сводка и рекомендации внутреннего высокоскоростного IP-сегмента

Дополнительные правила вознаграждения амбассадоров акции в марте 2023 г.

Можно ли открыть частный сервер Phantom Beast Palu одним щелчком мыши? Супер простой урок для начинающих! (Прилагается метод обновления сервера)

[Играйте с Phantom Beast Palu] Обновите игровой сервер Phantom Beast Pallu одним щелчком мыши

Maotouhu делится: последний доступный внутри страны адрес склада исходного образа Docker 2024 года (обновлено 1 декабря)

Кодирование Base64 в MultipartFile

5 точек расширения SpringBoot, супер практично!

Глубокое понимание сопоставления индексов Elasticsearch.